3 Week 3: Dictionary-Based Approaches

Slides

- 4 Dictionary-Based Approaches (link or in Perusall)

3.1 Setup

As always, we first load the packages that we’ll be using:

library(tidyverse) # for wrangling data

library(tidylog) # to know what we are wrangling

library(tidytext) # for 'tidy' manipulation of text data

library(textdata) # text datasets

library(quanteda) # tokenization power house

library(quanteda.textstats)

# Requires installing through devtools:

# devtools::install_github("kbenoit/quanteda.dictionaries")

library(quanteda.dictionaries)

library(wesanderson) # to prettify

library(knitr) # for displaying data in html format (relevant for formatting this worksheet mainly)3.2 Get Data:

For this example, we will be using data from Ventura et al. (2021) - Connective effervescence and streaming chat during political debates.

## text_id

## 1 1

## 2 2

## 3 3

## 4 4

## 5 5

## 6 6

## comments

## 1 MORE:\n The coronavirus pandemic's impact on the race will be on display as the\n two candidates won't partake in a handshake, customary at the top of \nsuch events. The size of the audience will also be limited. https://abcn.ws/3kVyl16

## 2 God please bless all Trump supporters. They need it for they know not what they do

## 3 Trump is a living disaster! What an embarrassment to all human beings! The man is dangerous!

## 4 This debate is why other counties laugh at us. School yard class president debate at best.

## 5 OMG\n ... shut up tRump ... so rude and out of control. Obviously freaking \nout. This is a debate NOT a convention or a speech or your platform. \nLearn some manners

## 6 It’s\n hard to see what this country has become. The Presidency is no longer a\n respected position it has lost all of it’s integrity.

## id likes

## 1 ABC News 100

## 2 Anita Hill 61

## 3 Dave Garland 99

## 4 Carl Roy 47

## 5 Lynda Martin-Chambers 154

## 6 Nica Merchant 171

## debate

## 1 abc_first_debate_manual

## 2 abc_first_debate_manual

## 3 abc_first_debate_manual

## 4 abc_first_debate_manual

## 5 abc_first_debate_manual

## 6 abc_first_debate_manual3.3 Tokenization etc.

The comments are mostly clean, but you can check (on your own) whether they require additional cleaning. In the previous code, I showed you how to lowercase text, remove stopwords, etc., using quanteda. We can also do this using tidytext3:

# Tokenize and lightly clean the `comments` text using tidytext.

#

# What this does:

# 1) lowercases the text in `comments`

# 2) tokenizes into one-token-per-row using `unnest_tokens()`

# 3) keeps only tokens that contain at least one lowercase letter (regex check)

# 4) removes stopwords using the `stop_words` lexicon

tidy_ventura <- ventura_etal_df %>%

# Lowercase the raw comment text to standardize tokens (e.g., "Trump" -> "trump")

mutate(comments = tolower(comments)) %>%

# Tokenize: creates a new column `word` and drops the original `comments` column by default

unnest_tokens(word, comments) %>%

# Keep only tokens that contain at least one lowercase letter a–z

# (this removes many punctuation-only / number-only tokens; tweak as needed)

filter(str_detect(word, "[a-z]")) %>%

# Remove stopwords (common function words like "the", "and", etc.)

filter(!word %in% stop_words$word)## mutate: changed 29,261 values (99%) of 'comments' (0 new NAs)

## filter: removed 3,374 rows (1%), 494,341 rows remaining

## filter: removed 296,793 rows (60%), 197,548 rows remaining

head(tidy_ventura, 20)## text_id id likes

## 1 1 ABC News 100

## 2 1 ABC News 100

## 3 1 ABC News 100

## 4 1 ABC News 100

## 5 1 ABC News 100

## 6 1 ABC News 100

## 7 1 ABC News 100

## 8 1 ABC News 100

## 9 1 ABC News 100

## 10 1 ABC News 100

## 11 1 ABC News 100

## 12 1 ABC News 100

## 13 1 ABC News 100

## 14 1 ABC News 100

## 15 1 ABC News 100

## 16 1 ABC News 100

## 17 1 ABC News 100

## 18 2 Anita Hill 61

## 19 2 Anita Hill 61

## 20 2 Anita Hill 61

## debate word

## 1 abc_first_debate_manual coronavirus

## 2 abc_first_debate_manual pandemic's

## 3 abc_first_debate_manual impact

## 4 abc_first_debate_manual race

## 5 abc_first_debate_manual display

## 6 abc_first_debate_manual candidates

## 7 abc_first_debate_manual partake

## 8 abc_first_debate_manual handshake

## 9 abc_first_debate_manual customary

## 10 abc_first_debate_manual top

## 11 abc_first_debate_manual events

## 12 abc_first_debate_manual size

## 13 abc_first_debate_manual audience

## 14 abc_first_debate_manual limited

## 15 abc_first_debate_manual https

## 16 abc_first_debate_manual abcn.ws

## 17 abc_first_debate_manual 3kvyl16

## 18 abc_first_debate_manual god

## 19 abc_first_debate_manual bless

## 20 abc_first_debate_manual trump3.4 Keywords

We can detect the occurrence of the words trump and biden in each comment (text_id).

# Create a comment-level dataset indicating whether each comment mentions "trump" and/or "biden" at least once.

trump_biden <- tidy_ventura %>%

# Create token-level indicator variables (1 if the token is the target word, else 0)

mutate(

trump_token = ifelse(word == "trump", 1, 0),

biden_token = ifelse(word == "biden", 1, 0)

) %>%

# Aggregate within each comment (grouped by text_id) to mark whether the comment

# contains at least one occurrence of each target word

group_by(text_id) %>%

mutate(

trump_cmmnt = ifelse(sum(trump_token) > 0, 1, 0),

biden_cmmnt = ifelse(sum(biden_token) > 0, 1, 0)

) %>%

# Reduce to the unit of analysis: one row per comment (text_id)

distinct(text_id, .keep_all = TRUE) %>%

dplyr::select(text_id, trump_cmmnt, biden_cmmnt, likes, debate)## mutate: new variable 'trump_token' (double) with 2 unique values and 0% NA

## new variable 'biden_token' (double) with 2 unique values and 0% NA

## group_by: one grouping variable (text_id)

## mutate (grouped): new variable 'trump_cmmnt' (double) with 2 unique values and 0% NA

## new variable 'biden_cmmnt' (double) with 2 unique values and 0% NA

## distinct (grouped): removed 168,013 rows (85%), 29,535 rows remaining (removed 0 groups, 29,535 groups remaining)

head(trump_biden, 20)## # A tibble: 20 × 5

## # Groups: text_id [20]

## text_id trump_cmmnt biden_cmmnt likes debate

## <int> <dbl> <dbl> <int> <chr>

## 1 1 0 0 100 abc_fir…

## 2 2 1 0 61 abc_fir…

## 3 3 1 0 99 abc_fir…

## 4 4 0 0 47 abc_fir…

## 5 5 1 0 154 abc_fir…

## 6 6 0 0 171 abc_fir…

## 7 7 0 0 79 abc_fir…

## 8 8 0 0 39 abc_fir…

## 9 9 0 0 53 abc_fir…

## 10 10 0 0 36 abc_fir…

## 11 11 1 0 41 abc_fir…

## 12 12 0 0 28 abc_fir…

## 13 13 1 0 54 abc_fir…

## 14 14 0 0 30 abc_fir…

## 15 15 1 0 27 abc_fir…

## 16 16 1 1 31 abc_fir…

## 17 17 1 0 35 abc_fir…

## 18 18 1 1 32 abc_fir…

## 19 19 0 0 34 abc_fir…

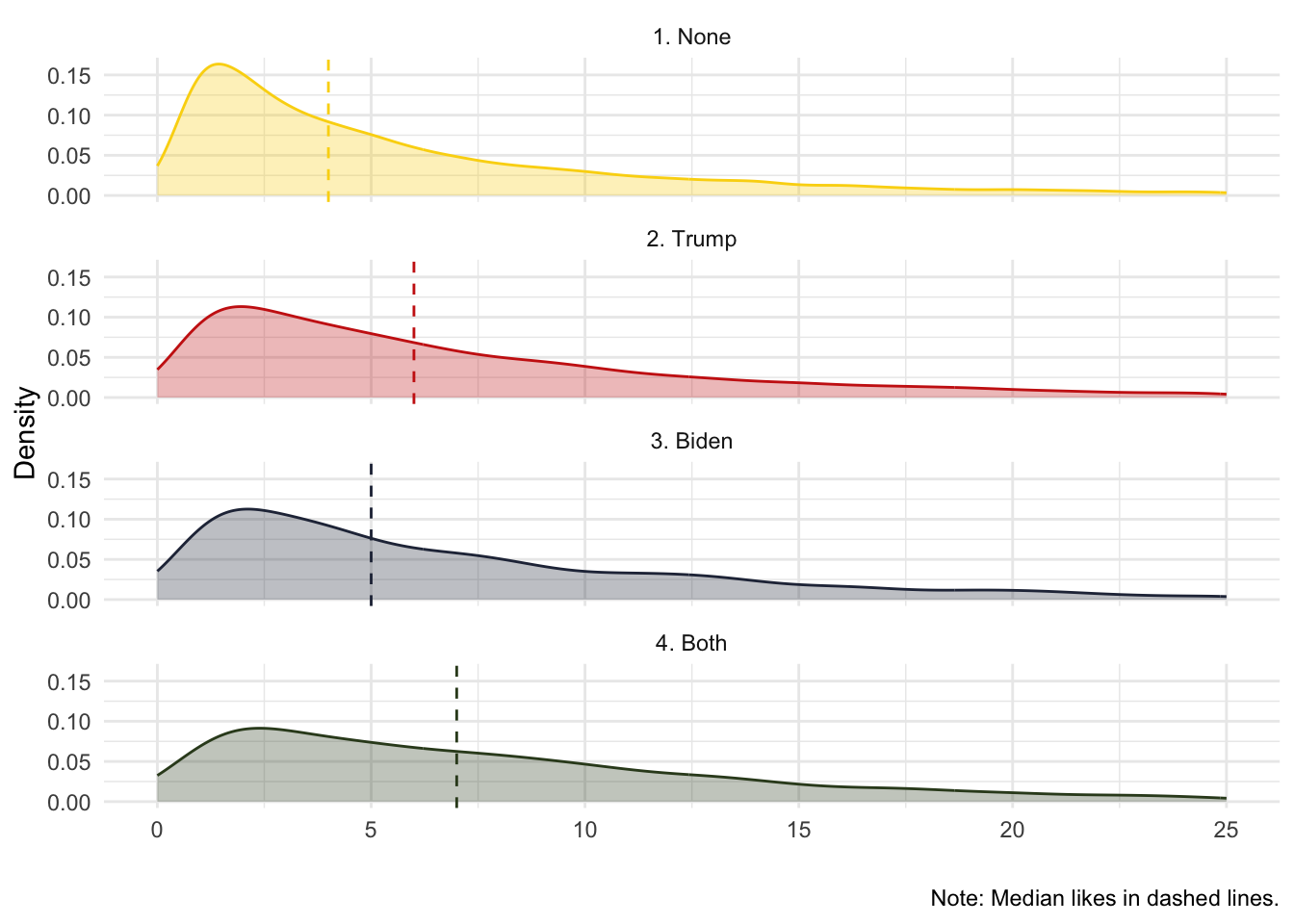

## 20 20 1 0 37 abc_fir…Rather than replicating the results from Figure 3 in Ventura et al. (2021), we will estimate the median number of likes that comments mentioning Trump, Biden, both, or neither receive:

trump_biden %>%

# Create categories

mutate(mentions_cat = ifelse(trump_cmmnt==0 & biden_cmmnt==0, "1. None", NA),

mentions_cat = ifelse(trump_cmmnt==1 & biden_cmmnt==0, "2. Trump", mentions_cat),

mentions_cat = ifelse(trump_cmmnt==0 & biden_cmmnt==1, "3. Biden", mentions_cat),

mentions_cat = ifelse(trump_cmmnt==1 & biden_cmmnt==1, "4. Both", mentions_cat)) %>%

# Remove the ones people like too much

filter(likes < 26) %>%

group_by(mentions_cat) %>%

mutate(likes_mean = median(likes, na.rm = T)) %>%

ungroup() %>%

# Plot densities by category, with dashed median lines

ggplot(aes(x=likes,fill = mentions_cat, color = mentions_cat)) +

geom_density(alpha = 0.3) +

scale_color_manual(values = wes_palette("BottleRocket2")) +

scale_fill_manual(values = wes_palette("BottleRocket2")) +

facet_wrap(~mentions_cat, ncol = 1) +

theme_minimal() +

geom_vline(aes(xintercept = likes_mean, color = mentions_cat), linetype = "dashed")+

theme(legend.position="none") +

labs(x="", y = "Density", color = "", fill = "",

caption = "Note: Median likes in dashed lines.")## mutate (grouped): new variable 'mentions_cat' (character) with 4 unique values and 0% NA

## filter (grouped): removed 8,136 rows (28%), 21,399 rows remaining (removed 8,136 groups, 21,399 groups remaining)

## group_by: one grouping variable (mentions_cat)

## mutate (grouped): new variable 'likes_mean' (double) with 3 unique values and 0% NA

## ungroup: no grouping variables remain

And we can also see if there are differences across news media:

trump_biden %>%

# Create categories

mutate(mentions_cat = ifelse(trump_cmmnt==0 & biden_cmmnt==0, "1. None", NA),

mentions_cat = ifelse(trump_cmmnt==1 & biden_cmmnt==0, "2. Trump", mentions_cat),

mentions_cat = ifelse(trump_cmmnt==0 & biden_cmmnt==1, "3. Biden", mentions_cat),

mentions_cat = ifelse(trump_cmmnt==1 & biden_cmmnt==1, "4. Both", mentions_cat),

media = ifelse(str_detect(debate, "abc"), "ABC", NA),

media = ifelse(str_detect(debate, "nbc"), "NBC", media),

media = ifelse(str_detect(debate, "fox"), "FOX", media)) %>%

# Remove the ones people like too much

filter(likes < 26) %>%

group_by(mentions_cat,media) %>%

mutate(median_like = median(likes,na.rm = T)) %>%

ungroup() %>%

# Plot

ggplot(aes(x=likes,fill = mentions_cat, color = mentions_cat)) +

geom_density(alpha = 0.3) +

scale_color_manual(values = wes_palette("BottleRocket2")) +

scale_fill_manual(values = wes_palette("BottleRocket2")) +

facet_wrap(~media, ncol = 1) +

geom_vline(aes(xintercept = median_like, color = mentions_cat), linetype = "dashed")+

theme_minimal() +

theme(legend.position="bottom") +

labs(x="", y = "Density", color = "", fill = "",

caption = "Note: Median likes in dashed lines.")## mutate (grouped): new variable 'mentions_cat' (character) with 4 unique values and 0% NA

## new variable 'media' (character) with 3 unique values and 0% NA

## filter (grouped): removed 8,136 rows (28%), 21,399 rows remaining (removed 8,136 groups, 21,399 groups remaining)

## group_by: 2 grouping variables (mentions_cat, media)

## mutate (grouped): new variable 'median_like' (double) with 6 unique values and 0% NA

## ungroup: no grouping variables remain

Similar to Young and Soroka (2012), we can also explore our keywords of interest in context. This is a good way to validate our proposed measure (e.g., is mentioning trump a reflection of interest, or simply relevance?).

# Create a quanteda corpus from the Ventura et al. dataset.

# - text_field indicates which column contains the text to treat as documents.

# - unique_docnames ensures each document is assigned a unique ID.

corpus_ventura <- corpus(

ventura_etal_df,

text_field = "comments",

unique_docnames = TRUE

)

# Tokenize the corpus so we can use token-based tools like kwic()

toks_ventura <- tokens(corpus_ventura)

# Extract "keywords in context" (KWIC) for occurrences of "Trump"

# This returns the keyword plus a window of surrounding tokens.

kw_trump <- kwic(toks_ventura, pattern = "Trump")

# Inspect the first 20 KWIC results

# (The number of tokens before/after the keyword is controlled by the window size in kwic().)

head(kw_trump, 20)## Keyword-in-context with 20 matches.

## [text2, 5] God please bless all | Trump

## [text3, 1] | Trump

## [text5, 7] ... shut up | tRump

## [text11, 11] a bad opiate problem then | trump

## [text13, 4] This is a | TRUMP

## [text15, 1] | Trump

## [text16, 8] this SO much better than | Trump

## [text17, 3] I love | Trump

## [text18, 4] Biden is right | Trump

## [text20, 1] | Trump

## [text22, 12] being a decent human. | Trump

## [text23, 1] | Trump

## [text27, 11] for once, i wish | trump

## [text28, 10] it America... | Trump

## [text30, 1] | Trump

## [text31, 1] | Trump

## [text32, 1] | Trump

## [text32, 15] People open your eyes. | Trump

## [text34, 1] | Trump

## [text36, 1] | Trump

##

## | supporters. They need it

## | is a living disaster!

## | ... so rude

## | brings up about bidens son

## | all about ME debate and

## | is looking pretty flushed right

## | and I wasn’t even going

## | ! He is the best

## | doesn’t have a plan for

## | worse president EVER 😡 thank

## | doesn't know the meaning of

## | such a hateful person he

## | would shut his trap for

## | IS NOT smarter than a

## | has improved our economy and

## | has done so much harm

## | is a clown and after

## | is evil.

## | is so broke that is

## | is literally making this debateWe can also look for more than one word at the same time:

## Keyword-in-context with 20 matches.

## [text4, 17] yard class president debate at |

## [text10, 1] |

## [text17, 8] Trump! He is the |

## [text43, 6] This is gonna be the |

## [text81, 31] an incompetent President, the |

## [text81, 33] President, the worst, |

## [text81, 35] the worst, worst, |

## [text82, 11] was totally one sided! |

## [text86, 8] right - Trump is the |

## [text100, 9] !! BRAVO BRAVO THE |

## [text102, 4] Obama was the |

## [text119, 10] he said he would do |

## [text138, 13] think. He is the |

## [text141, 22] puppet could be? The |

## [text143, 6] Trump may not be the |

## [text158, 15] This man is a the |

## [text167, 3] He the |

## [text221, 34] by far have been the |

## [text221, 36] have been the worst, |

## [text221, 38] the worst, WORST, |

##

## best | .

## Worst | debate I’ve ever seen!

## best | president ever! Thank you

## best | show on TV in 4

## worst | , worst, worst in

## worst | , worst in history.

## worst | in history.

## Worst | ever! Our president kept

## worst | president America ever had!

## BEST | PRESIDENT OF THE WORLD.

## worst | president ever!!!

## Best | President ever Crybabies don't like

## worst | president ever

## worst | president in our time ever

## best | choice but I will choose

## worst | thing that has ever happened

## worst | president we had in the

## worst | , WORST, WORST PRESIDENT

## WORST | , WORST PRESIDENT!!

## WORST | PRESIDENT!!!Alternatively, we can examine which words most commonly occur together. These are called collocations (a concept closely related to n-grams). Here, we want to identify the most common names mentioned (first and last names).

toks_ventura <- tokens(corpus_ventura, remove_punct = TRUE)

col_ventura <- tokens_select(toks_ventura,

# Keep only tokens that start with a capital letter

pattern = "^[A-Z]",

valuetype = "regex",

case_insensitive = FALSE,

padding = TRUE) %>%

textstat_collocations(min_count = 20) # Minimum number of collocations to be taken into account.

head(col_ventura, 20)## collocation count count_nested length

## 1 chris wallace 1695 0 2

## 2 president trump 831 0 2

## 3 joe biden 431 0 2

## 4 fox news 267 0 2

## 5 mr president 152 0 2

## 6 united states 144 0 2

## 7 donald trump 141 0 2

## 8 mike pence 40 0 2

## 9 jo jorgensen 78 0 2

## 10 HE IS 43 0 2

## 11 democratic party 38 0 2

## 12 vice president 347 0 2

## 13 CHRIS WALLACE 38 0 2

## 14 PRESIDENT TRUMP 37 0 2

## 15 TRUMP IS 42 0 2

## 16 white house 47 0 2

## 17 african americans 35 0 2

## 18 JOE BIDEN 25 0 2

## 19 YOU ARE 27 0 2

## 20 IS NOT 34 0 2

## lambda z

## 1 6.752252 128.27065

## 2 3.747948 84.12773

## 3 3.389153 59.43303

## 4 8.943930 53.78327

## 5 4.985057 45.89307

## 6 12.106819 36.13493

## 7 4.735894 35.48376

## 8 8.952895 34.74457

## 9 10.969720 34.45690

## 10 6.205826 34.13557

## 11 9.093924 31.88407

## 12 8.555551 31.81775

## 13 9.634400 31.78949

## 14 5.845521 30.56607

## 15 5.197700 30.15608

## 16 11.387847 29.41946

## 17 7.750170 29.38751

## 18 7.534616 28.84871

## 19 6.651381 28.81572

## 20 5.508680 28.73548(The \(\lambda\) score is a measure of how strongly two words are associated, for example, how likely chris and wallace are to occur next to each other. For a complete explanation, you can read this paper.)

We can also discover collocations longer than two words. In the example below, we identify collocations consisting of three words.

# Identify frequent collocations (multi-word expressions) in the Ventura corpus.

# - tokens_select() is used here mainly to ensure settings are explicit:

# * case_insensitive = FALSE keeps case distinctions (e.g., "Trump" vs "trump")

# * padding = TRUE preserves token positions so collocations can be detected properly

# - textstat_collocations() finds statistically associated token sequences.

# * min_count sets a minimum frequency threshold (here: at least 100 occurrences)

# * size = 3 searches for 3-word collocations (trigrams)

col_ventura <- tokens_select(

toks_ventura,

case_insensitive = FALSE,

padding = TRUE

) %>%

textstat_collocations(min_count = 100, size = 3)

# Inspect the top 20 collocations

head(col_ventura, 20)## collocation count count_nested length

## 1 know how to 115 0 3

## 2 the american people 220 0 3

## 3 this is the 158 0 3

## 4 to do with 108 0 3

## 5 this debate is 167 0 3

## 6 is not a 139 0 3

## 7 wallace needs to 172 0 3

## 8 is the worst 110 0 3

## 9 is such a 107 0 3

## 10 trump is the 153 0 3

## 11 is a joke 248 0 3

## 12 trump has done 105 0 3

## 13 trump is a 322 0 3

## 14 this is not 119 0 3

## 15 trump needs to 131 0 3

## 16 what a joke 141 0 3

## 17 the united states 132 0 3

## 18 going to be 122 0 3

## 19 is going to 210 0 3

## 20 biden is a 164 0 3

## lambda z

## 1 3.098608190 11.32651308

## 2 2.602503749 10.16184037

## 3 1.393392161 9.01490638

## 4 4.010890138 7.21665079

## 5 0.994328169 6.14171313

## 6 0.796789305 6.08626604

## 7 1.635032948 4.63118161

## 8 1.840376131 3.63917670

## 9 0.776121492 2.54094422

## 10 0.280826175 2.53147919

## 11 2.096508909 2.53031226

## 12 0.644366045 2.27854315

## 13 0.204080417 2.01355366

## 14 0.447286359 1.99058988

## 15 0.577684570 1.93126531

## 16 2.376681732 1.67038242

## 17 0.738270253 1.43145851

## 18 1.918098701 1.35112904

## 19 0.101248917 0.60225753

## 20 0.001248882 0.010132713.5 Dictionary Approaches

We can extend the previous analysis by using dictionaries. You can create your own, use previously validated dictionaries, or use dictionaries that are already included in tidytext or quanteda (e.g., for sentiment analysis).

3.5.1 Sentiment Analysis

Let’s look at some pre-loaded sentiment dictionaries in tidytext:

-

AFFIN: measures sentiment with a numeric score between -5 and 5, and were validated in this paper.

get_sentiments("afinn")## # A tibble: 2,477 × 2

## word value

## <chr> <dbl>

## 1 abandon -2

## 2 abandoned -2

## 3 abandons -2

## 4 abducted -2

## 5 abduction -2

## 6 abductions -2

## 7 abhor -3

## 8 abhorred -3

## 9 abhorrent -3

## 10 abhors -3

## # ℹ 2,467 more rows-

bing: sentiment words found in online forums. More information here.

get_sentiments("bing")## # A tibble: 6,786 × 2

## word sentiment

## <chr> <chr>

## 1 2-faces negative

## 2 abnormal negative

## 3 abolish negative

## 4 abominable negative

## 5 abominably negative

## 6 abominate negative

## 7 abomination negative

## 8 abort negative

## 9 aborted negative

## 10 aborts negative

## # ℹ 6,776 more rows-

nrc: underpaid workers from Amazon mechanical Turk coded the emotional valence of a long list of terms, which were validated in this paper. Also, check this paper about MTurk, and why you shouldn’t trust data collected on MTurk.

get_sentiments("nrc")## # A tibble: 13,872 × 2

## word sentiment

## <chr> <chr>

## 1 abacus trust

## 2 abandon fear

## 3 abandon negative

## 4 abandon sadness

## 5 abandoned anger

## 6 abandoned fear

## 7 abandoned negative

## 8 abandoned sadness

## 9 abandonment anger

## 10 abandonment fear

## # ℹ 13,862 more rowsEach dictionary classifies and quantifies words in a different way. Let’s use the nrc sentiment dictionary to analyze our comments dataset. The nrc dictionary classifies, among other concepts, words as reflecting positive or negative sentiment.

nrc <- get_sentiments("nrc")

table(nrc$sentiment)##

## anger anticipation disgust

## 1245 837 1056

## fear joy negative

## 1474 687 3316

## positive sadness surprise

## 2308 1187 532

## trust

## 1230We will focus solely on positive or negative sentiment:

nrc_pos_neg <- get_sentiments("nrc") %>%

filter(sentiment == "positive" | sentiment == "negative")## filter: removed 8,248 rows (59%), 5,624 rows

## remaining

ventura_pos_neg <- tidy_ventura %>%

left_join(nrc_pos_neg)## Joining with `by = join_by(word)`

## left_join: added one column (sentiment)

## > rows only in x 147,204

## > rows only in nrc_pos_neg ( 3,402)

## > matched rows 52,059 (includes duplicates)

## > =========

## > rows total 199,263Let’s check the top positive words and the top negative words:

## group_by: one grouping variable (sentiment)

## count: now 14,242 rows and 3 columns, one group variable remaining (sentiment)## # A tibble: 14,242 × 3

## # Groups: sentiment [3]

## sentiment word n

## <chr> <chr> <int>

## 1 <NA> trump 11676

## 2 <NA> biden 7847

## 3 positive president 4920

## 4 <NA> wallace 4188

## 5 positive debate 2693

## 6 <NA> people 2591

## 7 <NA> chris 2559

## 8 <NA> joe 2380

## 9 <NA> country 1589

## 10 <NA> time 1226

## # ℹ 14,232 more rowsSome classifications make intuitive sense: “love” is positive and “bully” is negative. Others are less convincing: “talk” is positive? “joke” is negative? Some depend heavily on context: “vice” is negative, but vice president typically is not (especially since “president” is classified as “positive,” which… really?). And then “vote” is both positive and negative, which… what?

Let’s turn a blind eye for now (but, once again, see Grimmer et al., Chapter 15 for best practices).

Do people who watch different news media use different language? Let’s see what the data tell us. As always, check the unit of analysis in your dataset. In this case, each observation is a word, but we have a grouping variable for the comment (text_id), so we can count how many positive and negative words appear in each comment. We will calculate a net sentiment score by subtracting the number of negative words from the number of positive words (within each comment).

comment_pos_neg <- ventura_pos_neg %>%

# Create dummies of pos and neg for counting

mutate(pos_dum = ifelse(sentiment == "positive", 1, 0),

neg_dum = ifelse(sentiment == "negative", 1, 0)) %>%

# Estimate total number of tokens per comment, pos , and negs

group_by(text_id) %>%

mutate(total_words = n(),

total_pos = sum(pos_dum, na.rm = T),

total_neg = sum(neg_dum, na.rm = T)) %>%

# These values are aggregated at the text_id level so we can eliminate repeated text_id

distinct(text_id,.keep_all=TRUE) %>%

# Now we estimate the net sentiment score. You can change this and get a different way to measure the ratio of positive to negative

mutate(net_sent = total_pos - total_neg) %>%

ungroup() ## mutate: new variable 'pos_dum' (double) with 3 unique values and 74% NA

## new variable 'neg_dum' (double) with 3 unique values and 74% NA

## group_by: one grouping variable (text_id)

## mutate (grouped): new variable 'total_words' (integer) with 25 unique values and 0% NA

## new variable 'total_pos' (double) with 14 unique values and 0% NA

## new variable 'total_neg' (double) with 10 unique values and 0% NA

## distinct (grouped): removed 169,728 rows (85%), 29,535 rows remaining (removed 0 groups, 29,535 groups remaining)

## mutate (grouped): new variable 'net_sent' (double) with 21 unique values and 0% NA

## ungroup: no grouping variables remain

# Note that the `word` and `sentiment` columns are meaningless now

head(comment_pos_neg, 10)## # A tibble: 10 × 12

## text_id id likes debate word sentiment

## <int> <chr> <int> <chr> <chr> <chr>

## 1 1 ABC News 100 abc_f… coro… <NA>

## 2 2 Anita Hi… 61 abc_f… god positive

## 3 3 Dave Gar… 99 abc_f… trump <NA>

## 4 4 Carl Roy 47 abc_f… deba… positive

## 5 5 Lynda Ma… 154 abc_f… omg <NA>

## 6 6 Nica Mer… 171 abc_f… it’s <NA>

## 7 7 Connie S… 79 abc_f… happ… <NA>

## 8 8 Tammy Ei… 39 abc_f… expe… <NA>

## 9 9 Susan We… 53 abc_f… smart <NA>

## 10 10 Dana Spe… 36 abc_f… worst <NA>

## # ℹ 6 more variables: pos_dum <dbl>,

## # neg_dum <dbl>, total_words <int>,

## # total_pos <dbl>, total_neg <dbl>,

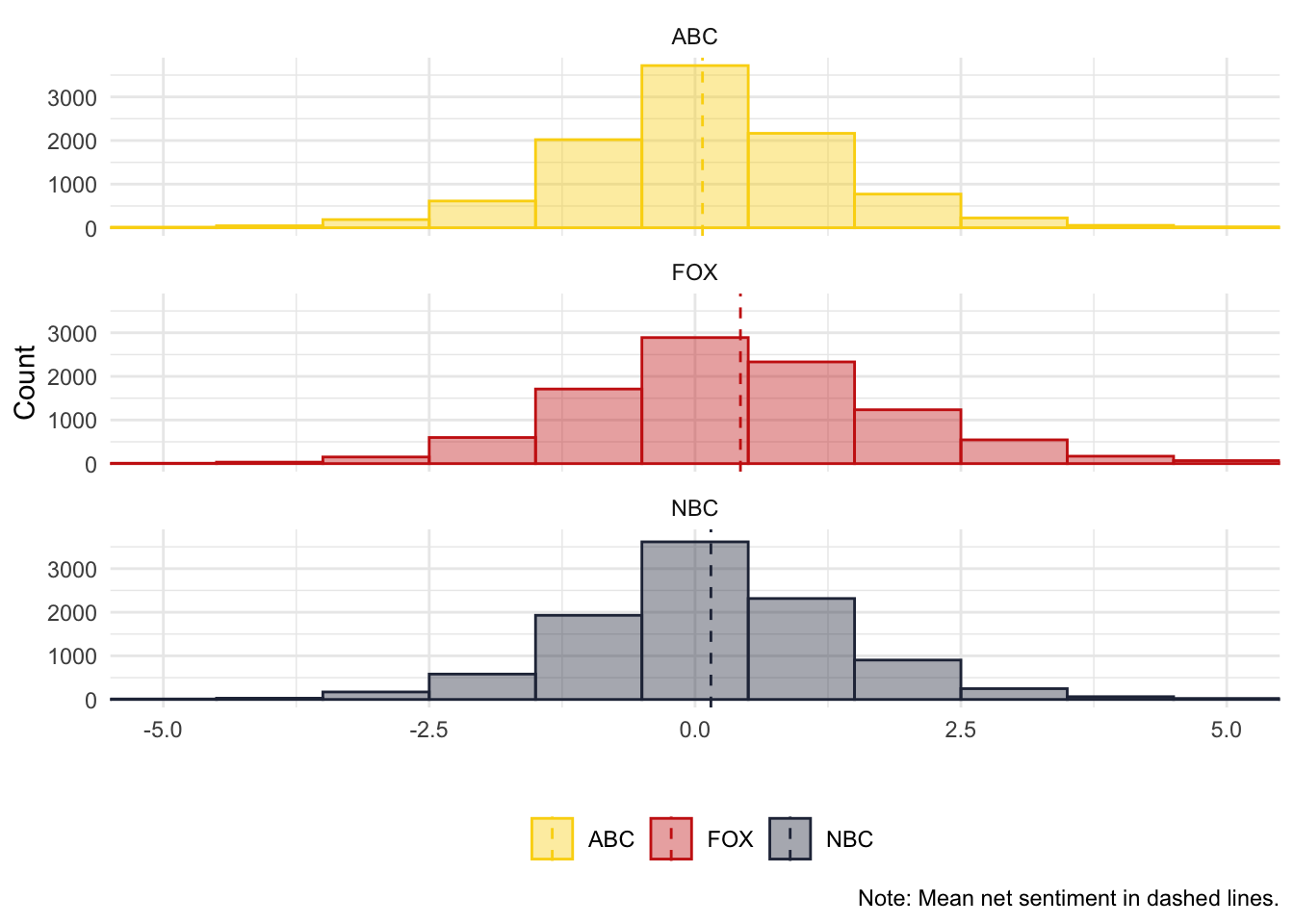

## # net_sent <dbl>Ok, now we can plot the differences:

comment_pos_neg %>%

# Create categories

mutate(media = ifelse(str_detect(debate, "abc"), "ABC", NA),

media = ifelse(str_detect(debate, "nbc"), "NBC", media),

media = ifelse(str_detect(debate, "fox"), "FOX", media)) %>%

group_by(media) %>%

mutate(median_sent = mean(net_sent)) %>%

ggplot(aes(x=net_sent,color=media,fill=media)) +

geom_histogram(alpha = 0.4,

binwidth = 1) +

scale_color_manual(values = wes_palette("BottleRocket2")) +

scale_fill_manual(values = wes_palette("BottleRocket2")) +

facet_wrap(~media, ncol = 1) +

geom_vline(aes(xintercept = median_sent, color = media), linetype = "dashed")+

theme_minimal() +

theme(legend.position="bottom") +

coord_cartesian(xlim = c(-5,5)) +

labs(x="", y = "Count", color = "", fill = "",

caption = "Note: Mean net sentiment in dashed lines.")## mutate: new variable 'media' (character) with 3 unique values and 0% NA

## group_by: one grouping variable (media)

## mutate (grouped): new variable 'median_sent' (double) with 3 unique values and 0% NA

3.5.2 Domain-Specific Dictionaries

Sentiment dictionaries are common, but you can build a dictionary for any concept you’re interested in. As long as you can create a lexicon (and validate it), you can conduct an analysis similar to the one we just carried out. This time, rather than using an off-the-shelf sentiment dictionary, we will create our own. Let’s try a dictionary for two topics: the economy and migration.

As long as the dictionary has the same structure as our nrc_pos_neg object, we can follow the same process we used for the sentiment dictionary.

# Define two simple, domain-specific dictionaries (lexicons) for:

# 1) the economy and 2) migration.

# Each dictionary is a two-column data frame with:

# - word: the token to match in the text

# - topic: the category/label we want to assign when that token appears

economy <- cbind.data.frame(

c("economy", "taxes", "inflation", "debt", "employment", "jobs"),

"economy"

)

colnames(economy) <- c("word", "topic")

migration <- cbind.data.frame(

c("immigrants", "border", "wall", "alien", "migrant", "visa", "daca", "dreamer"),

"migration"

)

colnames(migration) <- c("word", "topic")

# Combine the two topic-specific lexicons into a single dictionary object

dict <- rbind.data.frame(economy, migration)

# Inspect the resulting dictionary

dict## word topic

## 1 economy economy

## 2 taxes economy

## 3 inflation economy

## 4 debt economy

## 5 employment economy

## 6 jobs economy

## 7 immigrants migration

## 8 border migration

## 9 wall migration

## 10 alien migration

## 11 migrant migration

## 12 visa migration

## 13 daca migration

## 14 dreamer migrationLet’s see if we find some of these words in our comments:

ventura_topic <- tidy_ventura %>%

left_join(dict)## Joining with `by = join_by(word)`

## left_join: added one column (topic)

## > rows only in x 196,175

## > rows only in dict ( 3)

## > matched rows 1,373

## > =========

## > rows total 197,548## filter: removed 196,175 rows (99%), 1,373 rows remaining

## group_by: one grouping variable (topic)

## count: now 11 rows and 3 columns, one group variable remaining (topic)## # A tibble: 11 × 3

## # Groups: topic [2]

## topic word n

## <chr> <chr> <int>

## 1 economy taxes 680

## 2 economy economy 328

## 3 economy jobs 273

## 4 migration wall 34

## 5 economy debt 32

## 6 migration immigrants 12

## 7 migration border 7

## 8 economy employment 3

## 9 migration alien 2

## 10 migration daca 1

## 11 migration visa 1Not that many. Note that we did not stem or lemmatize our corpus, so if we want to capture “job” and “jobs,” we need to include both in our dictionary. In other words, any preprocessing steps we apply to the corpus should also be applied to the dictionary.

If you are a bit more versed in R, you will notice that dictionaries are often represented as lists. quanteda understands dictionaries as lists, so we can build them that way and use its liwcalike() function to find matching words in text. An added benefit is that we can use glob patterns to capture variations of the same word (e.g., job* will match “job,” “jobs,” and “jobless”).

dict <- dictionary(list(economy = c("econom*","tax*","inflation","debt*","employ*","job*"),

immigration = c("immigrant*","border","wall","alien","migrant*","visa*","daca","dreamer*")))

# liwcalike lowercases input text

ventura_topics <- liwcalike(ventura_etal_df$comments,

dictionary = dict)

# liwcalike keeps the order so we can cbind them directly

topics <- cbind.data.frame(ventura_etal_df,ventura_topics)

# Look only at the comments that mention the economy and immigration

head(topics[topics$economy>0 & topics$immigration>0,])## text_id

## 4998 4999

## 6475 6477

## 8098 8113

## 12331 32211

## 14345 34225

## 19889 62164

## comments

## 4998 Trump is going to create jobs to finish that wall, hows that working for ya? I don’t see Mexico paying for it either

## 6475 Trump is trash illegal immigrants pay more taxes than this man and you guys support this broke failure con billionaire

## 8098 $750.00 in taxes in two years????? BUT HE'S ALL OVER THE PLACE INSULTING IMMIGRANTS WHO PAID MORE IN TAXES!!!

## 12331 Ask\n Biden how much he will raise taxes to pay for all the things he says he\n is going to provide everyone - including illegal immigrants!

## 14345 Trump has been living the life and does not care for the hard working American...His taxes are not the only rip off...Investigate Wall Money...

## 19889 Vote trump out. He needs to pay taxes too ... immigrants pay more than that thief

## id likes

## 4998 Ellen Lustic NA

## 6475 Kevin G Vazquez 1

## 8098 Prince M Dorbor 1

## 12331 Lynne Basista Shine 6

## 14345 RJ Jimenez 4

## 19889 Nicole Brennan 13

## debate docname Segment

## 4998 abc_first_debate_manual text4998 4998

## 6475 abc_first_debate_manual text6475 6475

## 8098 abc_first_debate_manual text8098 8098

## 12331 fox_first_debate_manual text12331 12331

## 14345 fox_first_debate_manual text14345 14345

## 19889 nbc_first_debate_manual text19889 19889

## WPS WC Sixltr Dic economy

## 4998 12.50000 25 4.00 8.00 4.00

## 6475 20.00000 20 25.00 10.00 5.00

## 8098 14.00000 28 7.14 10.71 7.14

## 12331 27.00000 27 18.52 7.41 3.70

## 14345 11.66667 35 8.57 5.71 2.86

## 19889 9.50000 19 5.26 10.53 5.26

## immigration AllPunc Period Comma Colon

## 4998 4.00 12.00 0.00 4 0

## 6475 5.00 0.00 0.00 0 0

## 8098 3.57 35.71 3.57 0 0

## 12331 3.70 7.41 0.00 0 0

## 14345 2.86 25.71 25.71 0 0

## 19889 5.26 21.05 21.05 0 0

## SemiC QMark Exclam Dash Quote Apostro

## 4998 0 4.00 0.00 0.0 4.00 4.00

## 6475 0 0.00 0.00 0.0 0.00 0.00

## 8098 0 17.86 10.71 0.0 3.57 3.57

## 12331 0 0.00 3.70 3.7 0.00 0.00

## 14345 0 0.00 0.00 0.0 0.00 0.00

## 19889 0 0.00 0.00 0.0 0.00 0.00

## Parenth OtherP

## 4998 0 8.00

## 6475 0 0.00

## 8098 0 35.71

## 12331 0 3.70

## 14345 0 25.71

## 19889 0 21.05The output provides some interesting information. First, economy and immigration give us the percentage of words in the text that match our economy or immigration dictionaries. In general, we would not expect many words in a sentence to reference, for example, “jobs” for us to conclude that the sentence is about the economy. So, any value above 0% can be interpreted as mentioning the economy (unless you have theoretical reasons to treat, say, 3% as meaningfully different from 2%). For the remaining variables:

-

WPS: Words per sentence. -

WC: Word count. -

Sixltr: Six-letter words (%). -

Dic: % of words in the dictionary. -

Allpunct: % of all punctuation marks. -

PeriodtoOtherP: % of specific punctuation marks.

With this information, we can identify which users focus more on each topic:

## mutate: new variable 'media' (character) with 3 unique values and 0% NA

## new variable 'economy_dum' (double) with 2 unique values and 0% NA

## new variable 'immigration_dum' (double) with 2 unique values and 0% NA

## group_by: one grouping variable (media)

## mutate (grouped): new variable 'pct_econ' (double) with 3 unique values and 0% NA

## new variable 'pct_migr' (double) with 3 unique values and 0% NA

## distinct (grouped): removed 29,544 rows (>99%), 3 rows remaining (removed 0 groups, 3 groups remaining)| media | pct_econ | pct_migr |

|---|---|---|

| ABC | 0.0641299 | 0.0030441 |

| FOX | 0.0856325 | 0.0008175 |

| NBC | 0.0708661 | 0.0018171 |

3.5.3 Using Pre-Built Dictionaries

So far, we have seen how to apply pre-loaded dictionaries (e.g., sentiment) and how to apply our own dictionaries. What if you have a pre-built dictionary that you want to apply to your corpus? As long as the dictionary has the correct structure, we can use the techniques we have applied so far. This also means that you may need to do some data wrangling, since pre-built dictionaries come in many formats.

Let’s use the NRC Affect Intensity Lexicon (created by the same team behind the pre-loaded nrc sentiment dictionary). The NRC Affect Intensity Lexicon measures the intensity of an emotion on a scale from 0 (low) to 1 (high). For example, “defiance” has an anger intensity of 0.51, and “hate” has an anger intensity of 0.83.

intense_lex <- read.table(file = "data/NRC-AffectIntensity-Lexicon.txt", fill = TRUE,

header = TRUE)

head(intense_lex)## term score AffectDimension

## 1 outraged 0.964 anger

## 2 brutality 0.959 anger

## 3 hatred 0.953 anger

## 4 hateful 0.940 anger

## 5 terrorize 0.939 anger

## 6 infuriated 0.938 angerThis is more than a simple dictionary: the key advantage is that it provides an intensity score for each word, which gives us more variation in our analysis (e.g., instead of a binary anger/no-anger measure, we can analyze degrees of anger). We will use the tidytext approach to analyze degrees of “joy” in our corpus.

joy_lex <- intense_lex %>%

filter(AffectDimension=="joy") %>%

mutate(word=term) %>%

dplyr::select(word,AffectDimension,score)## filter: removed 4,546 rows (78%), 1,268 rows remaining

## mutate: new variable 'word' (character) with 1,268 unique values and 0% NA

ventura_joy <- tidy_ventura %>%

left_join(joy_lex) %>%

## Most of the comments have no joy words so we will change these NAs to 0 but this is an ad-hoc decision. This decision must be theoretically motivated and justified

mutate(score = ifelse(is.na(score),0,score))## Joining with `by = join_by(word)`

## left_join: added 2 columns (AffectDimension,

## score)

## > rows only in x 184,943

## > rows only in joy_lex ( 769)

## > matched rows 12,605

## > =========

## > rows total 197,548

## mutate: changed 184,943 values (94%) of 'score'

## (184,943 fewer NAs)

head(ventura_joy[ventura_joy$score>0,])## text_id id likes

## 18 2 Anita Hill 61

## 19 2 Anita Hill 61

## 23 3 Dave Garland 99

## 30 4 Carl Roy 47

## 64 8 Tammy Eisen 39

## 65 8 Tammy Eisen 39

## debate word

## 18 abc_first_debate_manual god

## 19 abc_first_debate_manual bless

## 23 abc_first_debate_manual living

## 30 abc_first_debate_manual laugh

## 64 abc_first_debate_manual experience

## 65 abc_first_debate_manual share

## AffectDimension score

## 18 joy 0.545

## 19 joy 0.561

## 23 joy 0.312

## 30 joy 0.891

## 64 joy 0.375

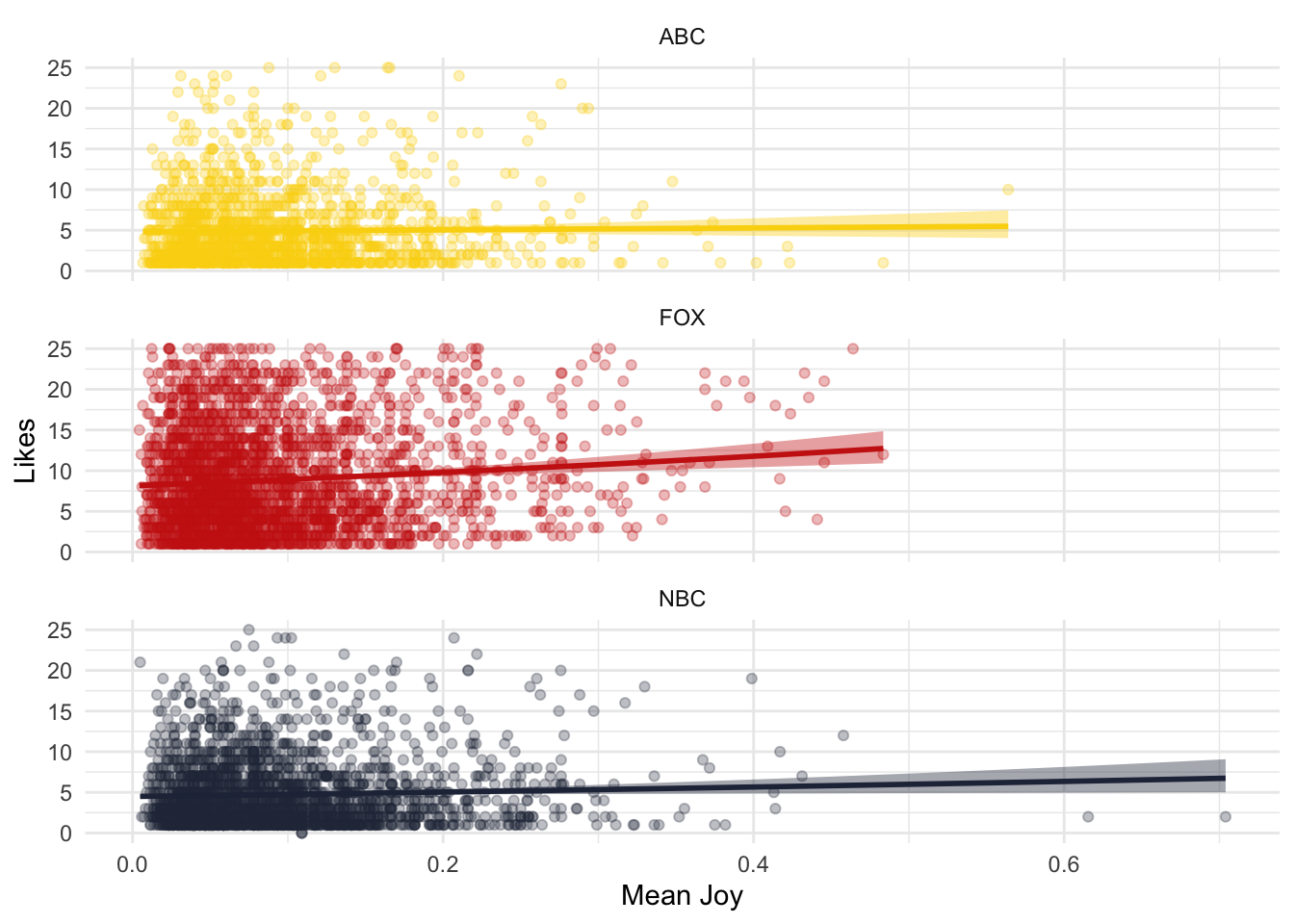

## 65 joy 0.438Now, we can see the relationship between likes and joy:

##

## Attaching package: 'MASS'## The following object is masked from 'package:tidylog':

##

## select## The following object is masked from 'package:dplyr':

##

## select

ventura_joy %>%

mutate(media = ifelse(str_detect(debate, "abc"), "ABC", NA),

media = ifelse(str_detect(debate, "nbc"), "NBC", media),

media = ifelse(str_detect(debate, "fox"), "FOX", media)) %>%

# Calculate mean joy in each comment

group_by(text_id) %>%

mutate(mean_joy = mean(score)) %>%

distinct(text_id,mean_joy,likes,media) %>%

ungroup() %>%

# Let's only look at comments that had SOME joy in them

filter(mean_joy > 0) %>%

# Remove the ones people like too much

filter(likes < 26) %>%

# Plot

ggplot(aes(x=mean_joy,y=likes,color=media,fill=media)) +

geom_point(alpha = 0.3) +

geom_smooth(method = "glm.nb") +

scale_color_manual(values = wes_palette("BottleRocket2")) +

scale_fill_manual(values = wes_palette("BottleRocket2")) +

facet_wrap(~media, ncol = 1) +

theme_minimal() +

theme(legend.position="none") +

labs(x="Mean Joy", y = "Likes", color = "", fill = "")## mutate: new variable 'media' (character) with 3

## unique values and 0% NA## group_by: one grouping variable (text_id)

## mutate (grouped): new variable 'mean_joy' (double) with 3,118 unique values and 0% NA

## distinct (grouped): removed 168,013 rows (85%), 29,535 rows remaining (removed 0 groups, 29,535 groups remaining)

## ungroup: no grouping variables remain

## filter: removed 20,355 rows (69%), 9,180 rows remaining

## filter: removed 2,518 rows (27%), 6,662 rows remaining

## `geom_smooth()` using formula = 'y ~ x'

Finally, for the sake of showing the process, I will write the code to load the dictionary using quanteda, but note that this approach loses all the intensity information.

affect_dict <- dictionary(list(anger = intense_lex$term[intense_lex$AffectDimension=="anger"],

fear = intense_lex$term[intense_lex$AffectDimension=="fear"],

joy = intense_lex$term[intense_lex$AffectDimension=="joy"],

sadness = intense_lex$term[intense_lex$AffectDimension=="sadness"]))

ventura_affect <- liwcalike(ventura_etal_df$comments,

dictionary = affect_dict)

# liwcalike keeps the order so we can cbind them directly

affect <- cbind.data.frame(ventura_etal_df,ventura_affect)

# Look only at the comments that have anger and fear

head(affect[affect$anger>0 & affect$fear>0,])## text_id

## 3 3

## 7 7

## 9 9

## 11 11

## 12 12

## 23 23

## comments

## 3 Trump is a living disaster! What an embarrassment to all human beings! The man is dangerous!

## 7 What happened to the days when it was a debate not a bully session! I am so ashamed of this administration!

## 9 ......\n a smart president? A thief, a con man, and a liar that has taken tax \npayers money to his own properties. A liar that knew the magnitude of \nthe virus and did not address it.

## 11 with\n the usa having such a bad opiate problem then trump brings up about \nbidens son is the most disgraceful thing any human being could do...vote\n him out

## 12 Trump’s\n only recourse in the debate is to demean his opponent and talk about \nwhat a great man he, himself is. Turn his mic off when it’s not his turn\n to speak. Nothing but babble!

## 23 Trump such a hateful person he has no moral or respect in a debate he blames everyone except him.

## id likes debate

## 3 Dave Garland 99 abc_first_debate_manual

## 7 Connie Sage 79 abc_first_debate_manual

## 9 Susan Weyant 53 abc_first_debate_manual

## 11 Lynn Kohler 41 abc_first_debate_manual

## 12 Jim Lape 28 abc_first_debate_manual

## 23 Joe Sonera 65 abc_first_debate_manual

## docname Segment WPS WC Sixltr Dic

## 3 text3 3 6.333333 19 15.79 36.84

## 7 text7 7 11.500000 23 17.39 17.39

## 9 text9 9 15.333333 46 8.70 13.04

## 11 text11 11 32.000000 32 6.25 28.12

## 12 text12 12 13.000000 39 12.82 5.13

## 23 text23 23 20.000000 20 15.00 25.00

## anger fear joy sadness AllPunc Period Comma

## 3 5.26 15.79 5.26 10.53 15.79 0.00 0.00

## 7 4.35 4.35 0.00 8.70 8.70 0.00 0.00

## 9 4.35 2.17 2.17 4.35 23.91 17.39 4.35

## 11 9.38 6.25 3.12 9.38 9.38 9.38 0.00

## 12 2.56 2.56 0.00 0.00 15.38 5.13 2.56

## 23 10.00 5.00 5.00 5.00 5.00 5.00 0.00

## Colon SemiC QMark Exclam Dash Quote Apostro

## 3 0 0 0.00 15.79 0 0.00 0.00

## 7 0 0 0.00 8.70 0 0.00 0.00

## 9 0 0 2.17 0.00 0 0.00 0.00

## 11 0 0 0.00 0.00 0 0.00 0.00

## 12 0 0 0.00 2.56 0 5.13 5.13

## 23 0 0 0.00 0.00 0 0.00 0.00

## Parenth OtherP

## 3 0 15.79

## 7 0 8.70

## 9 0 23.91

## 11 0 9.38

## 12 0 10.26

## 23 0 5.003.6 Assignments 1 - Due Date: EOD Friday Week 4

- Replicate the results from the left-most column of Figure 3 in Ventura et al. (2021).

- Look at the keywords in context for Biden in the

ventura_etal_dfdataset, and compare the results with the same data, but pre-processed (i.e., lower-case, remove stopwords, etc.). Which version provides more information about the context in which Biden appears in the comments? - Use a different collocation approach with the

ventura_etal_dfdataset, but pre-process the data (i.e., lower-case, remove stopwords, etc.). Which approach (pre-processed or not pre-processed) provides a better picture of the corpus or of the collocations you found? - Compare the positive sentiment of comments mentioning trump and comments mentioning biden using

bingandafinn. Note thatafinngives a numeric value, so you will need to choose a threshold to determine positive sentiment. - Using

bing, compare the sentiment of comments mentioning trump and comments mentioning biden using different metrics (e.g., Young and Soroka 2012, Martins and Baumard 2020, Ventura et al. 2021). - Create your own domain-specific dictionary and apply it to the

ventura_etal_dfdataset. Show the limitations of your dictionary (e.g., false positives), and comment on how much of a problem this would be if you wanted to conduct an analysis of this corpus.