6 Week 6: Topic Modeling (Unsupervised Learning II)

Slides

- 6 Scaling Techniques and Topic Modeling (link to slides)

6.1 K-Means Clustering

Before looking at the code for Structural Topic Models, let’s just think about the intuition behind the slightly more basic k-means clustering: K-means Intuition Building App
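To make the intuition concrete, here is a minimal sketch of k-means using base R's kmeans(). The toy data (two made-up blobs of points) and all object names are assumptions for illustration only; they are not part of the course data.

```r
# Toy data: two well-separated 2-D blobs (made-up numbers, for illustration only).
set.seed(42)
blob_a <- matrix(rnorm(40, mean = 0, sd = 0.5), ncol = 2)
blob_b <- matrix(rnorm(40, mean = 5, sd = 0.5), ncol = 2)
toy <- rbind(blob_a, blob_b)

# k-means alternates between two steps until the assignments stop changing:
# (1) assign each point to its nearest centroid;
# (2) move each centroid to the mean of the points assigned to it.
# nstart = 10 repeats the procedure from 10 random starts and keeps the best.
km <- kmeans(toy, centers = 2, nstart = 10)

km$centers         # estimated centroids, one row per cluster
table(km$cluster)  # number of points assigned to each cluster
```

As with topic models, we have to choose the number of groups (centers) ourselves, and the cluster labels are arbitrary: cluster "1" in one run may be cluster "2" in another.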

6.2 Setup

As always, we first load the packages that we’ll be using:

library(tidyverse) # for wrangling data

library(tidylog) # to know what we are wrangling

library(tidytext) # for 'tidy' manipulation of text data

library(quanteda) # tokenization power house

library(quanteda.textmodels)

library(stm) # run structural topic models

library(wesanderson) # to prettify

We get the data from the inaugural speeches again.

## # A tibble: 6 × 4

## inaugSpeech Year President party

## <chr> <dbl> <chr> <chr>

## 1 "My Countrymen, It a rel… 1853 Pierce Demo…

## 2 "Fellow citizens, I appe… 1857 Buchanan Demo…

## 3 "Fellow-Citizens of the … 1861 Lincoln Repu…

## 4 "Fellow-Countrymen:\r\n\… 1865 Lincoln Repu…

## 5 "Citizens of the United … 1869 Grant Repu…

## 6 "Fellow-Citizens:\r\n\r\… 1873 Grant Repu…

The text is pretty clean, so we can convert it into a corpus object, then into a dfm:

corpus_us_pres <- corpus(us_pres,

text_field = "inaugSpeech",

unique_docnames = TRUE)

summary(corpus_us_pres)

## Corpus consisting of 41 documents, showing 41 documents:

##

## Text Types Tokens Sentences Year President

## text1 1164 3631 104 1853 Pierce

## text2 944 3080 89 1857 Buchanan

## text3 1074 3992 135 1861 Lincoln

## text4 359 774 26 1865 Lincoln

## text5 484 1223 40 1869 Grant

## text6 551 1469 43 1873 Grant

## text7 830 2698 59 1877 Hayes

## text8 1020 3206 111 1881 Garfield

## text9 675 1812 44 1885 Cleveland

## text10 1351 4720 157 1889 Harrison

## text11 821 2125 58 1893 Cleveland

## text12 1231 4345 130 1897 McKinley

## text13 854 2437 100 1901 McKinley

## text14 404 1079 33 1905 T Roosevelt

## text15 1437 5822 158 1909 Taft

## text16 658 1882 68 1913 Wilson

## text17 548 1648 59 1917 Wilson

## text18 1168 3717 148 1921 Harding

## text19 1220 4440 196 1925 Coolidge

## text20 1089 3855 158 1929 Hoover

## text21 742 2052 85 1933 FD Roosevelt

## text22 724 1981 96 1937 FD Roosevelt

## text23 525 1494 68 1941 FD Roosevelt

## text24 274 619 27 1945 FD Roosevelt

## text25 780 2495 116 1949 Truman

## text26 899 2729 119 1953 Eisenhower

## text27 620 1883 92 1957 Eisenhower

## text28 565 1516 52 1961 Kennedy

## text29 567 1697 93 1965 Johnson

## text30 742 2395 103 1969 Nixon

## text31 543 1978 68 1973 Nixon

## text32 527 1364 52 1977 Carter

## text33 902 2772 129 1981 Reagan

## text34 924 2897 124 1985 Reagan

## text35 795 2667 141 1989 Bush

## text36 642 1833 81 1993 Clinton

## text37 772 2423 111 1997 Clinton

## text38 620 1804 97 2001 Bush

## text39 773 2321 100 2005 Bush

## text40 937 2667 110 2009 Obama

## text41 814 2317 88 2013 Obama

## party

## Democrat

## Democrat

## Republican

## Republican

## Republican

## Republican

## Republican

## Republican

## Democrat

## Republican

## Democrat

## Republican

## Republican

## Republican

## Republican

## Democrat

## Democrat

## Republican

## Republican

## Republican

## Democrat

## Democrat

## Democrat

## Democrat

## Democrat

## Republican

## Republican

## Democrat

## Democrat

## Republican

## Republican

## Democrat

## Republican

## Republican

## Republican

## Democrat

## Democrat

## Republican

## Republican

## Democrat

## Democrat

# We do the whole tokenization sequence

toks_us_pres <- tokens(corpus_us_pres,

remove_numbers = TRUE, # Think about this

remove_punct = TRUE, # Remove punctuation!

remove_url = TRUE) # Might be helpful

toks_us_pres <- tokens_remove(toks_us_pres,

# Should we though? See Denny and Spirling (2018)

c(stopwords(language = "en")),

padding = FALSE)

toks_us_pres <- tokens_wordstem(toks_us_pres, language = "en")

dfm_us_pres <- dfm(toks_us_pres)

6.3 Structural Topic Models

STM provides two ways to include contextual information to “guide” model estimation. First, topic prevalence can vary by metadata (e.g., Republicans talk about military issues more than Democrats). Second, topic content can vary by metadata (e.g., Republicans talk about military issues differently from Democrats).

We can run STM using the stm package. The package includes a complete workflow (i.e., from raw text to figures), and if you are planning to use it in the future, I highly encourage you to check this, this, this, and this.

At a high level, stm() takes our dfm and produces topics. If we do not specify any prevalence terms, it will estimate an LDA-style model (without covariates, STM reduces to a correlated topic model). Because the results can depend on how the estimation is initialized, it is recommended that you set a seed value for replication. We also need to choose \(K\), the number of topics. How many topics is the “right” number? There is no single right answer. With too many pre-specified topics, the categories might be meaningless; with too few, you might be lumping two or more topics together. Note that changes to (a) the number of topics, (b) the prevalence term, (c) the omitted words, or (d) the seed value can (greatly) change the outcome. This is where validation becomes crucial (for a review, see Wilkerson and Casas 2017).

Using our presidential speeches data, I will use stm to estimate topics in inaugural addresses. As the prevalence term, I include the party of the speaker. I set the number of topics to 10 (but with a corpus this large, I would likely start at around 30 and work my way up from there).

stm_us_pres <- stm(dfm_us_pres, K = 10, seed = 1984,

prevalence = ~party,

init.type = "Spectral")

## Beginning Spectral Initialization

## Calculating the gram matrix...

## Finding anchor words...

## ..........

## Recovering initialization...

## ..............................................

## Initialization complete.

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 1 (approx. per word bound = -7.071)

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 2 (approx. per word bound = -6.881, relative change = 2.687e-02)

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 3 (approx. per word bound = -6.819, relative change = 8.982e-03)

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 4 (approx. per word bound = -6.790, relative change = 4.255e-03)

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 5 (approx. per word bound = -6.780, relative change = 1.524e-03)

## Topic 1: us, new, world, nation, let

## Topic 2: new, can, us, nation, work

## Topic 3: constitut, state, union, can, law

## Topic 4: nation, must, us, peopl, can

## Topic 5: govern, peopl, upon, state, law

## Topic 6: nation, freedom, america, govern, peopl

## Topic 7: us, america, must, nation, american

## Topic 8: upon, nation, govern, peopl, shall

## Topic 9: world, nation, peopl, peac, can

## Topic 10: us, nation, govern, must, peopl

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 6 (approx. per word bound = -6.775, relative change = 7.006e-04)

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 7 (approx. per word bound = -6.771, relative change = 5.509e-04)

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 8 (approx. per word bound = -6.767, relative change = 5.381e-04)

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 9 (approx. per word bound = -6.765, relative change = 4.264e-04)

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 10 (approx. per word bound = -6.763, relative change = 2.933e-04)

## Topic 1: us, new, world, let, nation

## Topic 2: us, new, can, nation, work

## Topic 3: constitut, state, union, can, shall

## Topic 4: nation, must, peopl, us, world

## Topic 5: govern, peopl, upon, law, state

## Topic 6: nation, freedom, america, peopl, govern

## Topic 7: us, america, must, nation, american

## Topic 8: upon, nation, govern, peopl, can

## Topic 9: nation, world, peopl, peac, can

## Topic 10: us, govern, nation, peopl, must

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 11 (approx. per word bound = -6.761, relative change = 2.052e-04)

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 12 (approx. per word bound = -6.760, relative change = 1.718e-04)

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 13 (approx. per word bound = -6.759, relative change = 1.451e-04)

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 14 (approx. per word bound = -6.758, relative change = 1.120e-04)

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 15 (approx. per word bound = -6.758, relative change = 9.982e-05)

## Topic 1: us, new, let, world, nation

## Topic 2: us, new, can, nation, work

## Topic 3: constitut, state, union, can, shall

## Topic 4: nation, must, peopl, us, world

## Topic 5: govern, peopl, upon, law, state

## Topic 6: nation, freedom, america, peopl, govern

## Topic 7: us, america, must, nation, american

## Topic 8: upon, nation, govern, peopl, can

## Topic 9: nation, peopl, world, can, peac

## Topic 10: us, govern, nation, peopl, must

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 16 (approx. per word bound = -6.757, relative change = 1.013e-04)

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 17 (approx. per word bound = -6.756, relative change = 8.202e-05)

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 18 (approx. per word bound = -6.756, relative change = 6.758e-05)

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 19 (approx. per word bound = -6.756, relative change = 4.870e-05)

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 20 (approx. per word bound = -6.755, relative change = 3.674e-05)

## Topic 1: us, new, let, world, nation

## Topic 2: us, new, can, nation, work

## Topic 3: constitut, state, govern, peopl, shall

## Topic 4: nation, must, peopl, us, world

## Topic 5: govern, peopl, upon, law, state

## Topic 6: nation, freedom, america, peopl, govern

## Topic 7: us, must, america, nation, american

## Topic 8: upon, nation, govern, peopl, can

## Topic 9: nation, peopl, world, can, peac

## Topic 10: us, govern, nation, peopl, must

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 21 (approx. per word bound = -6.755, relative change = 3.567e-05)

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 22 (approx. per word bound = -6.755, relative change = 3.498e-05)

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 23 (approx. per word bound = -6.755, relative change = 3.208e-05)

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 24 (approx. per word bound = -6.754, relative change = 3.402e-05)

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 25 (approx. per word bound = -6.754, relative change = 3.194e-05)

## Topic 1: us, new, let, world, nation

## Topic 2: us, new, can, nation, work

## Topic 3: constitut, state, govern, peopl, shall

## Topic 4: nation, must, peopl, us, world

## Topic 5: govern, peopl, upon, law, state

## Topic 6: nation, freedom, america, govern, peopl

## Topic 7: us, must, america, nation, american

## Topic 8: upon, nation, govern, peopl, can

## Topic 9: nation, peopl, world, can, peac

## Topic 10: us, govern, nation, peopl, must

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 26 (approx. per word bound = -6.754, relative change = 2.748e-05)

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 27 (approx. per word bound = -6.754, relative change = 2.585e-05)

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 28 (approx. per word bound = -6.754, relative change = 2.739e-05)

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 29 (approx. per word bound = -6.753, relative change = 4.431e-05)

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 30 (approx. per word bound = -6.753, relative change = 2.313e-05)

## Topic 1: us, new, let, nation, world

## Topic 2: us, new, can, nation, work

## Topic 3: constitut, state, govern, peopl, shall

## Topic 4: nation, peopl, must, us, world

## Topic 5: govern, peopl, upon, law, state

## Topic 6: nation, freedom, america, govern, peopl

## Topic 7: us, must, america, nation, american

## Topic 8: upon, nation, govern, peopl, can

## Topic 9: nation, peopl, world, can, peac

## Topic 10: us, govern, nation, peopl, world

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 31 (approx. per word bound = -6.753, relative change = 1.789e-05)

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 32 (approx. per word bound = -6.753, relative change = 1.825e-05)

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 33 (approx. per word bound = -6.753, relative change = 1.667e-05)

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 34 (approx. per word bound = -6.753, relative change = 1.600e-05)

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 35 (approx. per word bound = -6.753, relative change = 1.738e-05)

## Topic 1: us, new, let, nation, world

## Topic 2: us, new, can, nation, work

## Topic 3: constitut, state, govern, peopl, shall

## Topic 4: nation, peopl, must, us, world

## Topic 5: govern, peopl, upon, law, state

## Topic 6: nation, freedom, america, govern, peopl

## Topic 7: us, must, america, nation, american

## Topic 8: upon, nation, govern, peopl, can

## Topic 9: nation, peopl, world, can, peac

## Topic 10: us, govern, nation, peopl, world

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 36 (approx. per word bound = -6.752, relative change = 1.979e-05)

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 37 (approx. per word bound = -6.752, relative change = 2.035e-05)

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 38 (approx. per word bound = -6.752, relative change = 1.686e-05)

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 39 (approx. per word bound = -6.752, relative change = 1.478e-05)

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 40 (approx. per word bound = -6.752, relative change = 1.269e-05)

## Topic 1: us, new, let, nation, world

## Topic 2: us, new, can, nation, work

## Topic 3: constitut, state, govern, peopl, shall

## Topic 4: nation, peopl, must, us, world

## Topic 5: govern, peopl, upon, law, state

## Topic 6: nation, freedom, america, govern, peopl

## Topic 7: us, must, america, nation, american

## Topic 8: upon, nation, govern, peopl, can

## Topic 9: nation, peopl, world, can, peac

## Topic 10: us, govern, nation, peopl, world

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 41 (approx. per word bound = -6.752, relative change = 1.409e-05)

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 42 (approx. per word bound = -6.752, relative change = 1.433e-05)

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 43 (approx. per word bound = -6.752, relative change = 2.066e-05)

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 44 (approx. per word bound = -6.752, relative change = 2.418e-05)

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

## Completing Iteration 45 (approx. per word bound = -6.751, relative change = 1.609e-05)

## Topic 1: us, new, let, nation, world

## Topic 2: us, new, can, nation, work

## Topic 3: constitut, state, govern, peopl, shall

## Topic 4: nation, peopl, must, us, world

## Topic 5: govern, peopl, upon, law, state

## Topic 6: nation, freedom, america, peopl, govern

## Topic 7: us, must, america, nation, american

## Topic 8: upon, nation, govern, peopl, can

## Topic 9: nation, peopl, world, can, peac

## Topic 10: us, govern, nation, peopl, world

## .........................................

## Completed E-Step (0 seconds).

## Completed M-Step.

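## Model Converged

The nice thing about the stm() function is that it allows us to see, in “real time,” what is going on inside the black box. Note that stm() fits the model with a variational EM algorithm (hence the E-Step and M-Step lines in the log above), not with Gibbs sampling. Still, the collapsed Gibbs sampler used for LDA-style models is easier to picture and gives the right intuition, so we can summarize the process as follows: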

1. Go through each document and randomly assign each word in the document to one of the \(K\) topics, \(t \in \{1, \dots, K\}\).

2. Notice that this random assignment already gives topic representations for all documents and word distributions for all topics (albeit not very good ones).

3. To improve these estimates, for each document \(W\), do the following:

3.1 Go through each word \(w\) in \(W\).

3.1.1 For each topic \(t\), compute two quantities:

3.1.1.1 \(p(t \mid W)\): the proportion of words in document \(W\) that are currently assigned to topic \(t\); and

3.1.1.2 \(p(w \mid t)\): the proportion of assignments to topic \(t\) (across all documents) that come from the word \(w\).

3.1.2 Reassign \(w\) to a new topic by choosing topic \(t\) with probability proportional to \(p(t \mid W)\, p(w \mid t)\). Under the generative model, this is essentially the probability that topic \(t\) generated word \(w\), so it makes sense to resample the current word’s topic using this probability. (I’m glossing over a couple of details here, most notably the use of priors/pseudocounts in these probabilities.) In other words, at this step we assume that all topic assignments except for the current word are correct, and then update the assignment of the current word using our model of how documents are generated.

4. After repeating the previous step many times, you eventually reach a roughly steady state where the assignments are reasonably good. You can then use these assignments to estimate (a) the topic mixture of each document (by counting the proportion of words assigned to each topic within that document) and (b) the words associated with each topic (by counting the proportion of words assigned to each topic overall).
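The steps above can be sketched in a few lines of base R. This is a toy collapsed Gibbs sampler for an LDA-style model, not what stm() runs internally; the tiny corpus, the number of topics, and the pseudocounts alpha and eta are all assumptions chosen for illustration.

```r
set.seed(1984)

# Toy corpus: each document is a vector of word ids from a 6-word vocabulary.
docs <- list(c(1, 2, 1, 3, 2), c(1, 3, 3, 2), c(4, 5, 6, 5, 4), c(6, 4, 5, 6))
K <- 2       # number of topics (assumed)
V <- 6       # vocabulary size
alpha <- 0.1 # pseudocount for document-topic proportions (assumed)
eta <- 0.01  # pseudocount for topic-word proportions (assumed)

# Step 1: randomly assign every word to a topic.
z <- lapply(docs, function(d) sample.int(K, length(d), replace = TRUE))

# Count tables implied by the current assignments.
n_dk <- matrix(0, length(docs), K) # words in document d assigned to topic k
n_kw <- matrix(0, K, V)            # times word w is assigned to topic k
for (d in seq_along(docs)) for (i in seq_along(docs[[d]])) {
  n_dk[d, z[[d]][i]] <- n_dk[d, z[[d]][i]] + 1
  n_kw[z[[d]][i], docs[[d]][i]] <- n_kw[z[[d]][i], docs[[d]][i]] + 1
}

# Step 3, repeated many times: resample the topic of one word at a time.
for (sweep in 1:200) {
  for (d in seq_along(docs)) {
    for (i in seq_along(docs[[d]])) {
      w <- docs[[d]][i]
      t_old <- z[[d]][i]
      # Take the current word out of the counts.
      n_dk[d, t_old] <- n_dk[d, t_old] - 1
      n_kw[t_old, w] <- n_kw[t_old, w] - 1
      # Unnormalized p(t | W) * p(w | t), with pseudocounts.
      p <- (n_dk[d, ] + alpha) * (n_kw[, w] + eta) / (rowSums(n_kw) + V * eta)
      t_new <- sample.int(K, 1, prob = p)
      # Put the word back under its new topic.
      z[[d]][i] <- t_new
      n_dk[d, t_new] <- n_dk[d, t_new] + 1
      n_kw[t_new, w] <- n_kw[t_new, w] + 1
    }
  }
}

# Step 4: turn the final counts into estimates.
theta_hat <- (n_dk + alpha) / rowSums(n_dk + alpha) # topic mixture per document
beta_hat <- (n_kw + eta) / rowSums(n_kw + eta)      # word distribution per topic
round(theta_hat, 2)
```

Because the sampler is random, the topic labels (and, on a corpus this small, even the grouping) can change with the seed, which is one more reason to fix it.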

(This explanation was adapted from here.) Let’s explore the topics produced:

labelTopics(stm_us_pres)

## Topic 1 Top Words:

## Highest Prob: us, new, let, nation, world, can, america

## FREX: let, centuri, togeth, dream, new, promis, weak

## Lift: 19th, 200th, 20th, adventur, angri, catch, caught

## Score: role, dream, abroad, third, explor, shape, proud

## Topic 2 Top Words:

## Highest Prob: us, new, can, nation, work, world, day

## FREX: friend, mr, thing, breez, blow, word, fact

## Lift: breez, addict, alloc, assistanc, bacteria, bicentenni, bipartisanship

## Score: breez, crucial, blow, manger, page, thank, sometim

## Topic 3 Top Words:

## Highest Prob: constitut, state, govern, peopl, shall, can, law

## FREX: case, constitut, slave, union, territori, slaveri, perpetu

## Lift: abli, acting, adduc, afloat, alleg, altern, anarchi

## Score: case, slaveri, territori, slave, invas, provis, fli

## Topic 4 Top Words:

## Highest Prob: nation, peopl, must, us, world, can, govern

## FREX: activ, republ, task, industri, confid, inspir, normal

## Lift: stricken, unshaken, abdic, abject, abnorm, acclaim, afresh

## Score: normal, activ, amid, readjust, self-reli, relationship, unshaken

## Topic 5 Top Words:

## Highest Prob: govern, peopl, upon, law, state, countri, nation

## FREX: revenu, tariff, offic, appoint, busi, proper, consider

## Lift: 15th, 30th, abey, aborigin, acquaint, actuat, acut

## Score: revenu, legisl, enforc, polici, negro, interst, tariff

## Topic 6 Top Words:

## Highest Prob: nation, freedom, america, peopl, govern, know, democraci

## FREX: democraci, ideal, million, liberti, freedom, came, seen

## Lift: aught, charta, clariti, compact, constrict, counti, dire

## Score: democraci, paint, magna, million, excus, encount, unlimit

## Topic 7 Top Words:

## Highest Prob: us, must, america, nation, american, world, peopl

## FREX: journey, stori, generat, storm, america, job, ideal

## Lift: winter, afghanistan, aids, alongsid, anchor, anybodi, apathi

## Score: stori, journey, job, capitol, storm, thank, drift

## Topic 8 Top Words:

## Highest Prob: upon, nation, govern, peopl, can, shall, great

## FREX: enforc, counsel, organ, island, thought, upon, integr

## Lift: creation, cuba, eighteenth, fast, adapt, aspect, cuban

## Score: enforc, island, cuba, counsel, organ, eighteenth, adapt

## Topic 9 Top Words:

## Highest Prob: nation, peopl, world, can, peac, must, free

## FREX: resourc, contribut, repres, everywher, result, free, europ

## Lift: display, joint, likewis, philosophi, abhor, absurd, accur

## Score: europ, philosophi, commun, contribut, precept, tax, program

## Topic 10 Top Words:

## Highest Prob: us, govern, nation, peopl, world, must, american

## FREX: weapon, tax, believ, hero, man, reduc, dream

## Lift: 50th, hearten, marker, masteri, mathia, penal, rocket

## Score: weapon, hero, monument, nuclear, spend, tax, soviet

FREX weights words by both their overall frequency and how exclusive they are to the topic. Lift weights words by dividing by their frequency in other topics, which gives higher weight to words that appear less frequently elsewhere. Similar to Lift, Score divides the log frequency of a word in the topic by the log frequency of that word in other topics (Roberts et al. 2013). Bischof and Airoldi (2012) show the value of using FREX over the other measures.
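To see why FREX and raw probability can rank words differently, here is a simplified sketch in base R. The topic-word matrix is hypothetical, the functions are written for illustration (they are not the internal code of stm), and the column mean is used as a stand-in for the corpus-wide word frequency when computing lift.

```r
# Hypothetical topic-word probabilities: 2 topics (rows) x 4 stemmed words (columns).
beta <- rbind(
  topic1 = c(nation = 0.50, tariff = 0.30, revenu = 0.15, slave = 0.05),
  topic2 = c(nation = 0.40, tariff = 0.05, revenu = 0.05, slave = 0.50)
)

# Exclusivity: the share of a word's total probability mass that sits in each topic.
excl <- sweep(beta, 2, colSums(beta), "/")

# FREX: harmonic mean of the within-topic empirical CDF of exclusivity and of
# frequency; w is the weight placed on exclusivity (0.5 is an assumption here).
toy_frex <- function(beta, excl, w = 0.5) {
  out <- t(sapply(seq_len(nrow(beta)), function(k) {
    1 / (w / ecdf(excl[k, ])(excl[k, ]) + (1 - w) / ecdf(beta[k, ])(beta[k, ]))
  }))
  dimnames(out) <- dimnames(beta)
  out
}
frex_scores <- toy_frex(beta, excl)

# Lift: probability in the topic relative to the word's average probability.
lift_scores <- sweep(beta, 2, colMeans(beta), "/")

names(which.max(beta["topic1", ]))        # most probable word in topic 1
names(which.max(frex_scores["topic1", ])) # top FREX word in topic 1
```

In this toy matrix, "nation" is frequent in both topics, so it is not very informative about either one; FREX instead favors a word that is both reasonably frequent and mostly confined to the topic.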

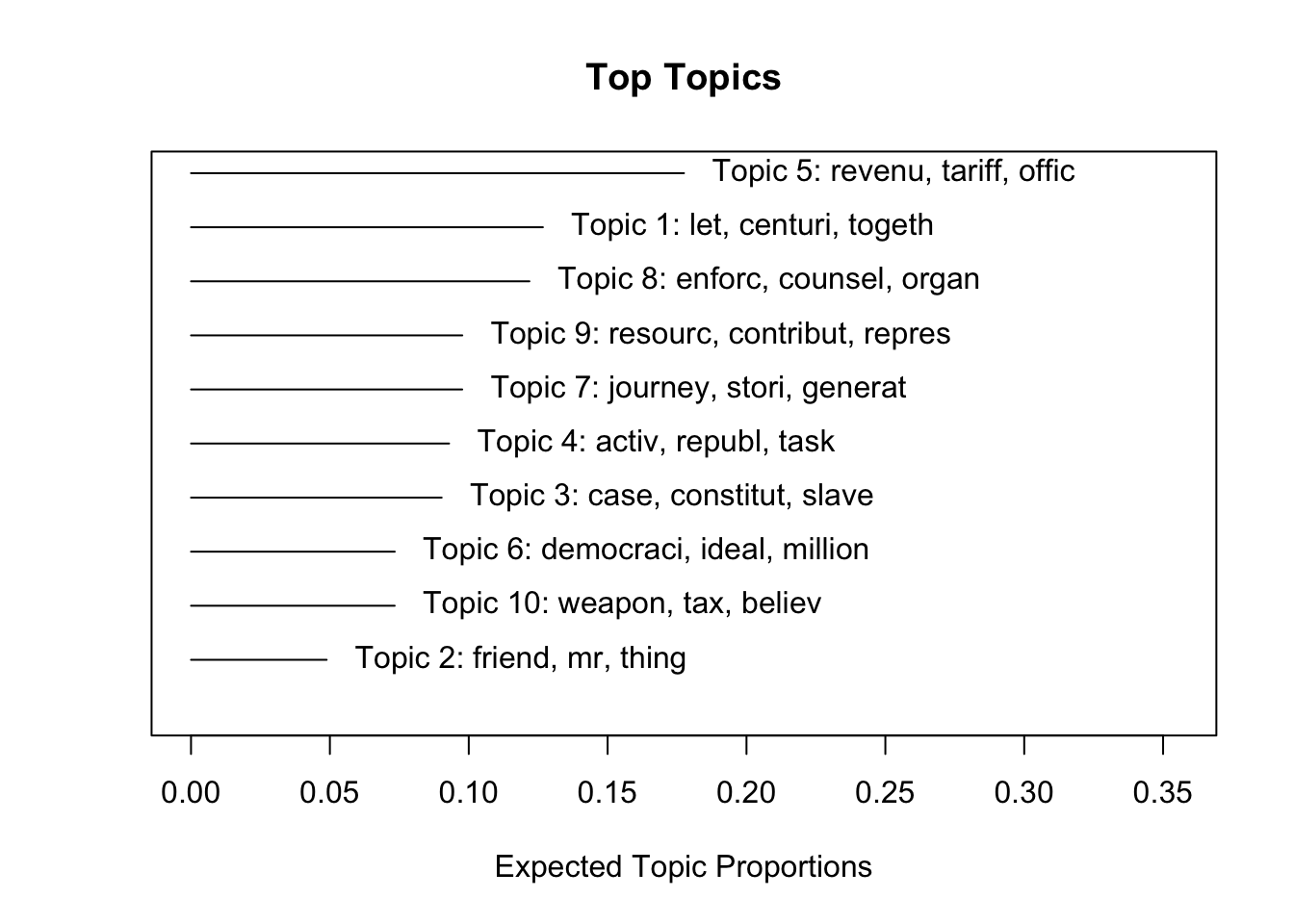

You can use the plot() function to show the topics.

plot(stm_us_pres, type = "summary", labeltype = "frex") # or "prob", "lift", "score"

Topic 5 seems to be about the economy: revenue, tariffs, etc. Topic 3 is about slavery and the Civil War. If you want to see a sample of a specific topic:

findThoughts(stm_us_pres, texts = as.character(corpus_us_pres)[docnames(dfm_us_pres)], topics = 3)

That is a long speech.

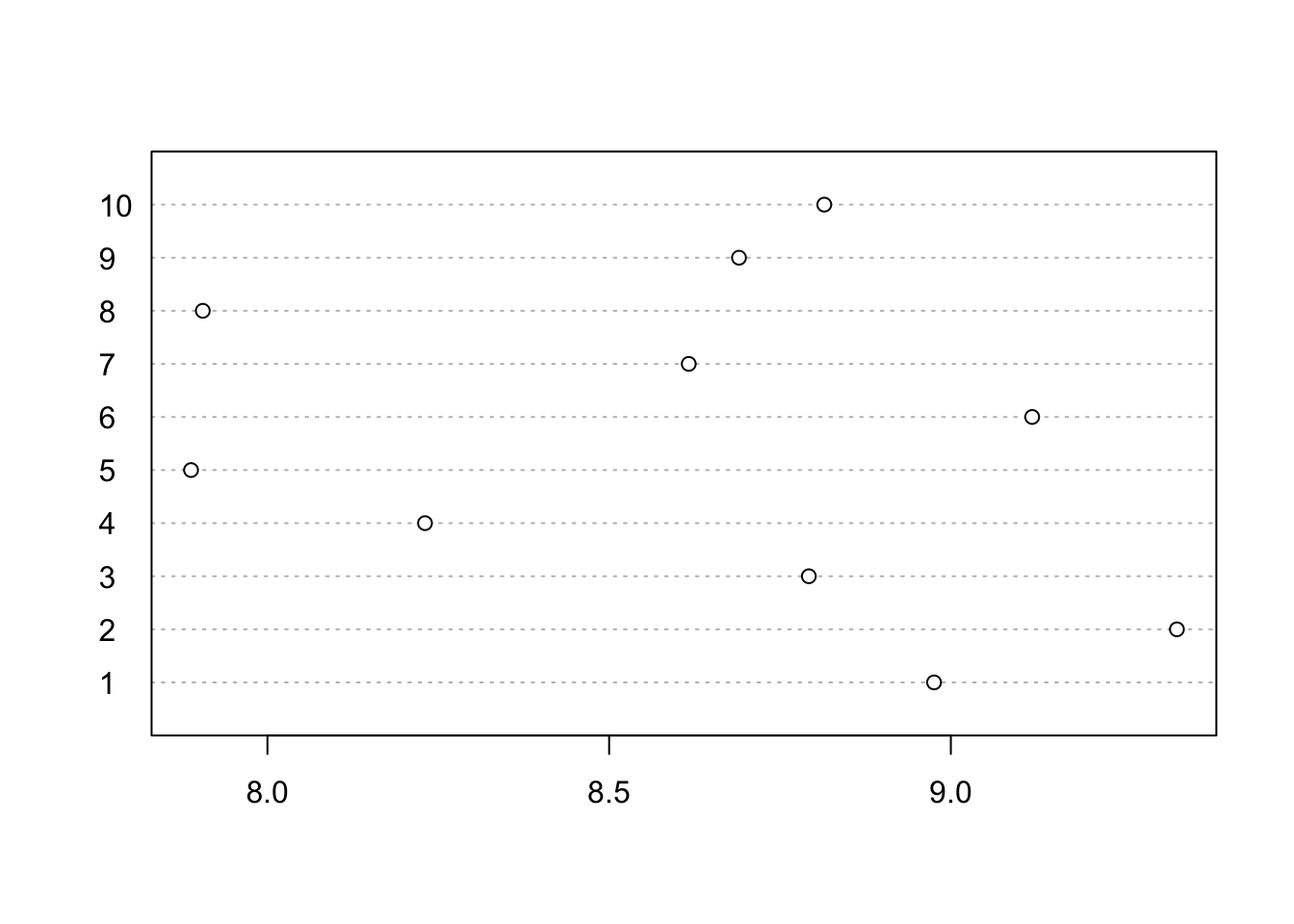

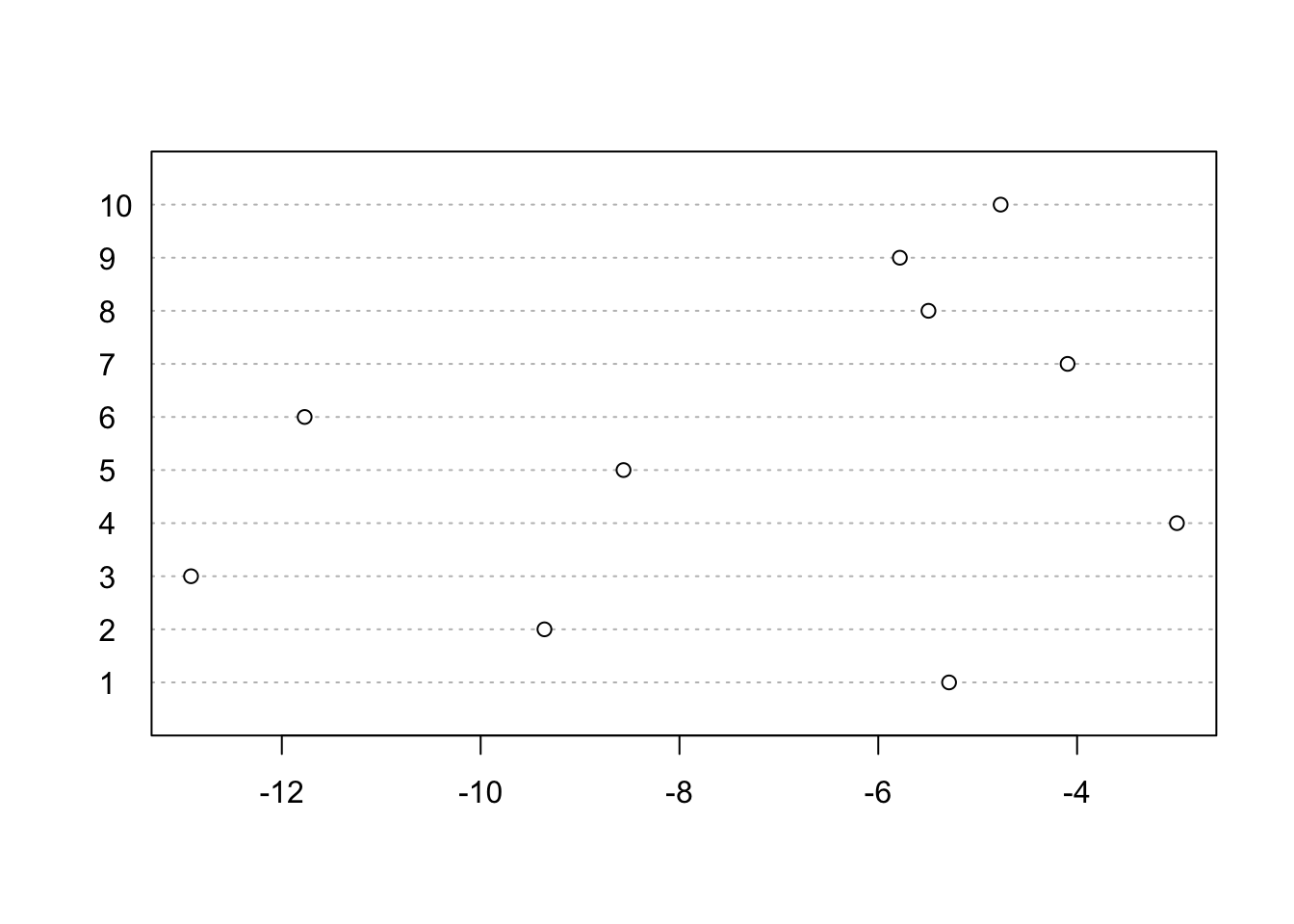

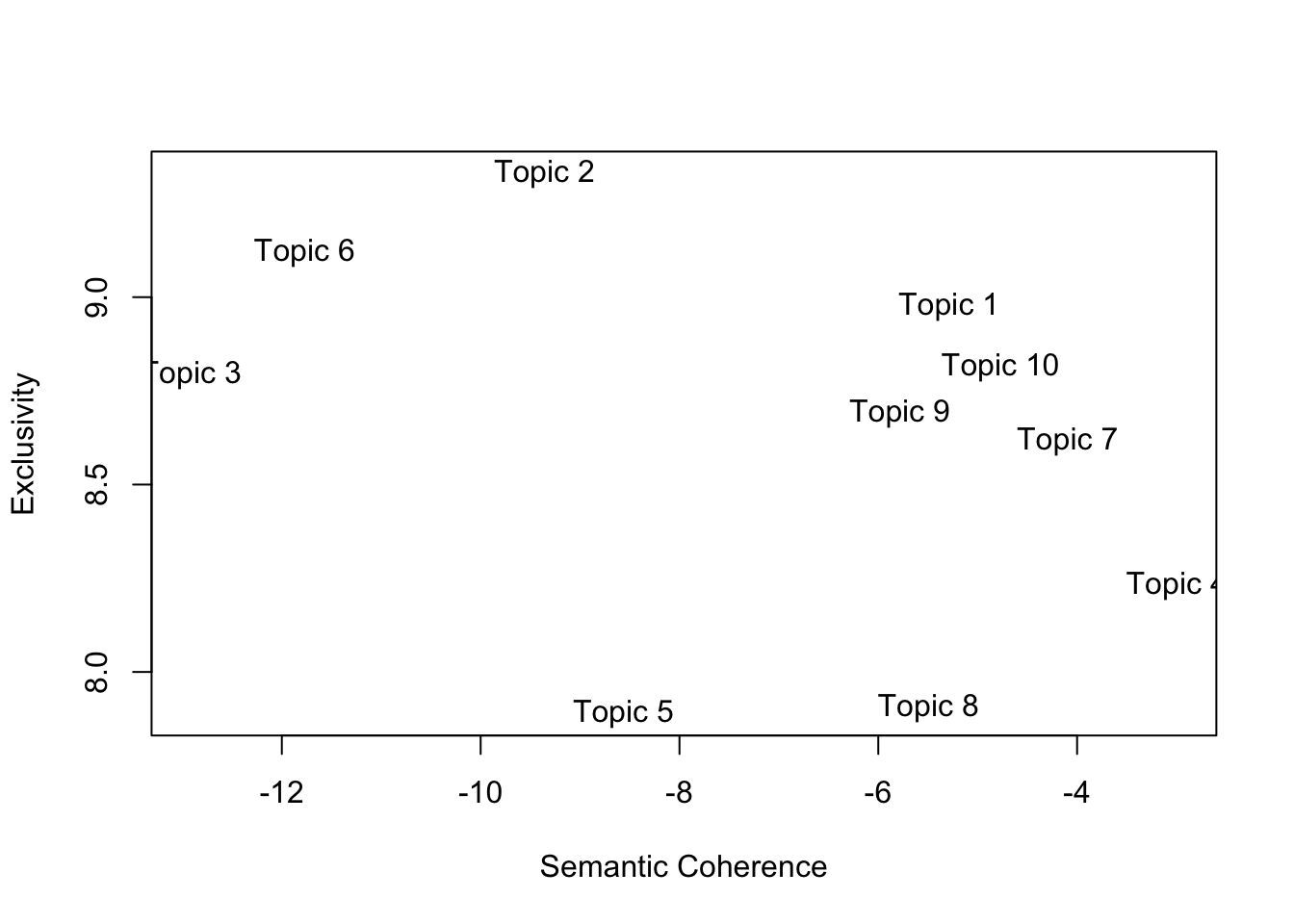

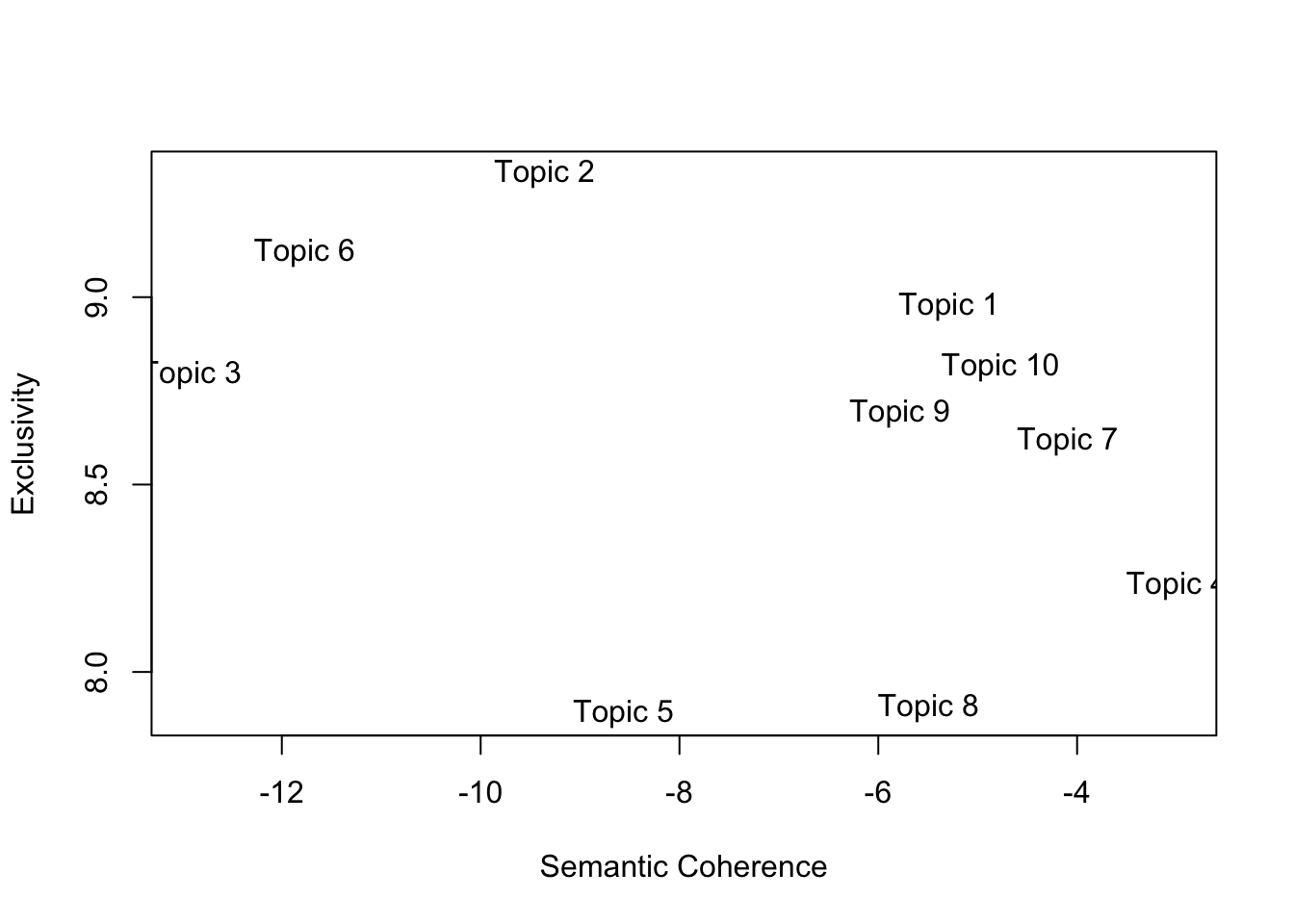

We can (should/must) run some diagnostics. There are two qualities that we are looking for in our model: semantic coherence and exclusivity. Exclusivity is based on the FREX labeling matrix. Semantic coherence is a criterion developed by Mimno et al. (2011); it is highest when the most probable words in a given topic frequently co-occur. Mimno et al. (2011) show that the metric correlates well with human judgment of topic quality. Yet it is fairly easy to obtain high semantic coherence (for instance, with a few topics dominated by very common words), so it is important to look at it in tandem with exclusivity. Let’s see how exclusive the words in each topic are:

dotchart(exclusivity(stm_us_pres), labels = 1:10)

We can also see the semantic coherence of our topics (the words a topic generates should co-occur often in the same document):

dotchart(semanticCoherence(stm_us_pres,dfm_us_pres), labels = 1:10)
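For intuition about what this number measures, here is a small sketch of the Mimno et al. (2011) coherence score computed by hand: for the top words of a topic, it sums log((D(v_m, v_l) + 1) / D(v_l)) over word pairs, where D(v) counts the documents containing word v and D(v_m, v_l) counts the documents containing both. The document-term matrix and the helper toy_coherence() are hypothetical and written only for illustration.

```r
# Hypothetical binary document-term matrix: 5 documents x 4 stemmed words.
dtm <- rbind(
  c(1, 1, 1, 0),
  c(1, 1, 0, 0),
  c(1, 0, 1, 0),
  c(0, 0, 0, 1),
  c(1, 1, 0, 1)
)
colnames(dtm) <- c("tariff", "revenu", "trade", "dream")

# top_words must be ordered from most to least probable within the topic.
toy_coherence <- function(dtm, top_words) {
  present <- (dtm[, top_words, drop = FALSE] > 0) * 1
  co_doc <- crossprod(present) # co_doc[m, l] = documents containing both words
  doc_freq <- diag(co_doc)     # doc_freq[l] = documents containing word l
  total <- 0
  for (m in 2:length(top_words)) {
    for (l in 1:(m - 1)) {
      total <- total + log((co_doc[m, l] + 1) / doc_freq[l])
    }
  }
  total
}

# Words that tend to appear in the same documents...
toy_coherence(dtm, c("tariff", "revenu", "trade"))
# ...versus a set that mixes in a word from elsewhere.
toy_coherence(dtm, c("tariff", "dream", "trade"))
```

The score is at most zero in typical cases and moves closer to zero as the top words co-occur more often, which is why the values reported for our model are negative.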

We can also see the overall quality of our topic model:

topicQuality(stm_us_pres,dfm_us_pres)

## [1] -5.287875 -9.358241 -12.913601 -2.995819

## [5] -8.562729 -11.770514 -4.095783 -5.495206

## [9] -5.782951 -4.769013

## [1] 8.975480 9.330844 8.792229 8.230546 7.888169

## [6] 9.119047 8.616511 7.905410 8.690023 8.814819

On their own, neither metric is very informative (what do those numbers even mean?). They become useful when we compare models while looking for the “optimal” number of topics.

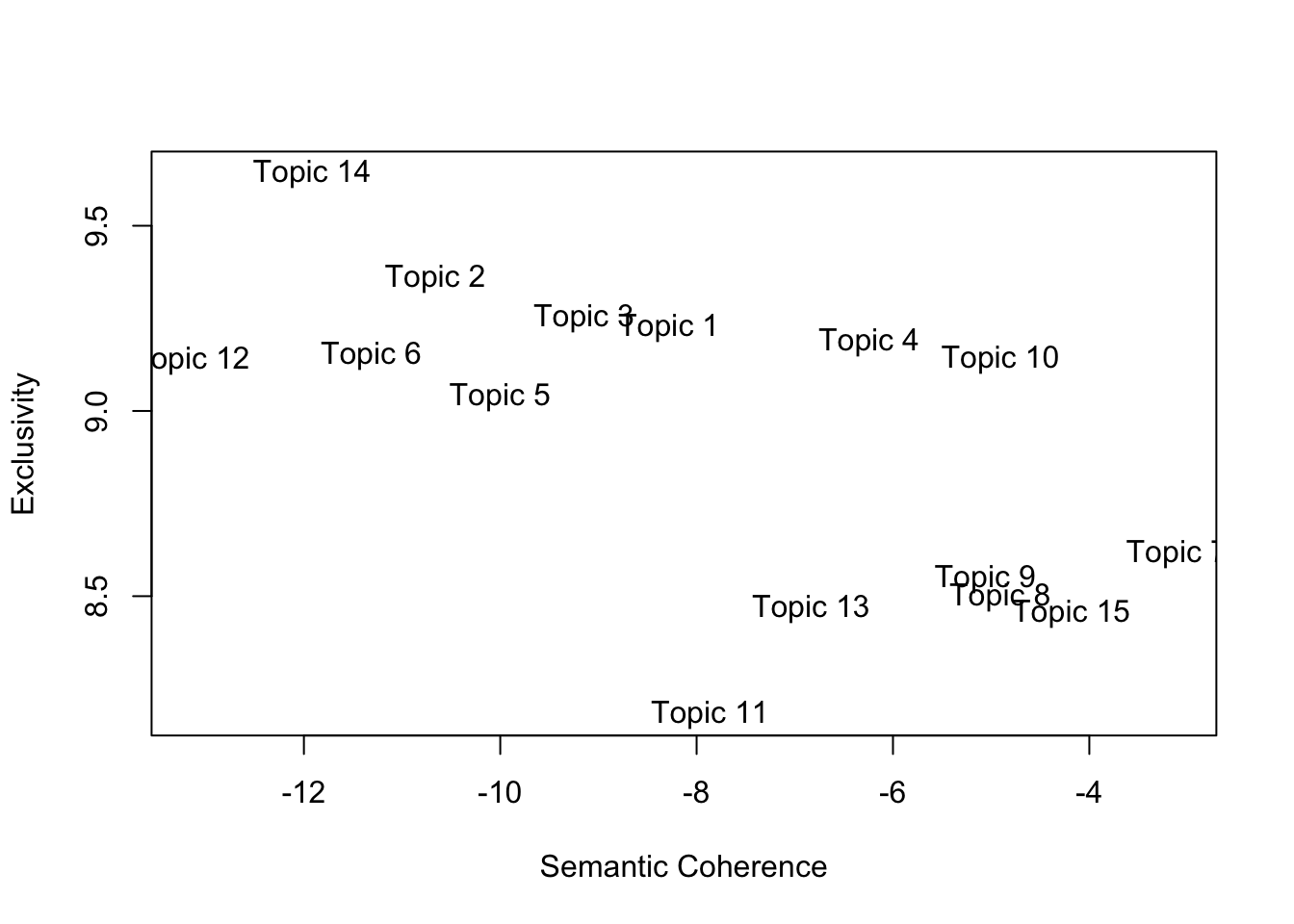

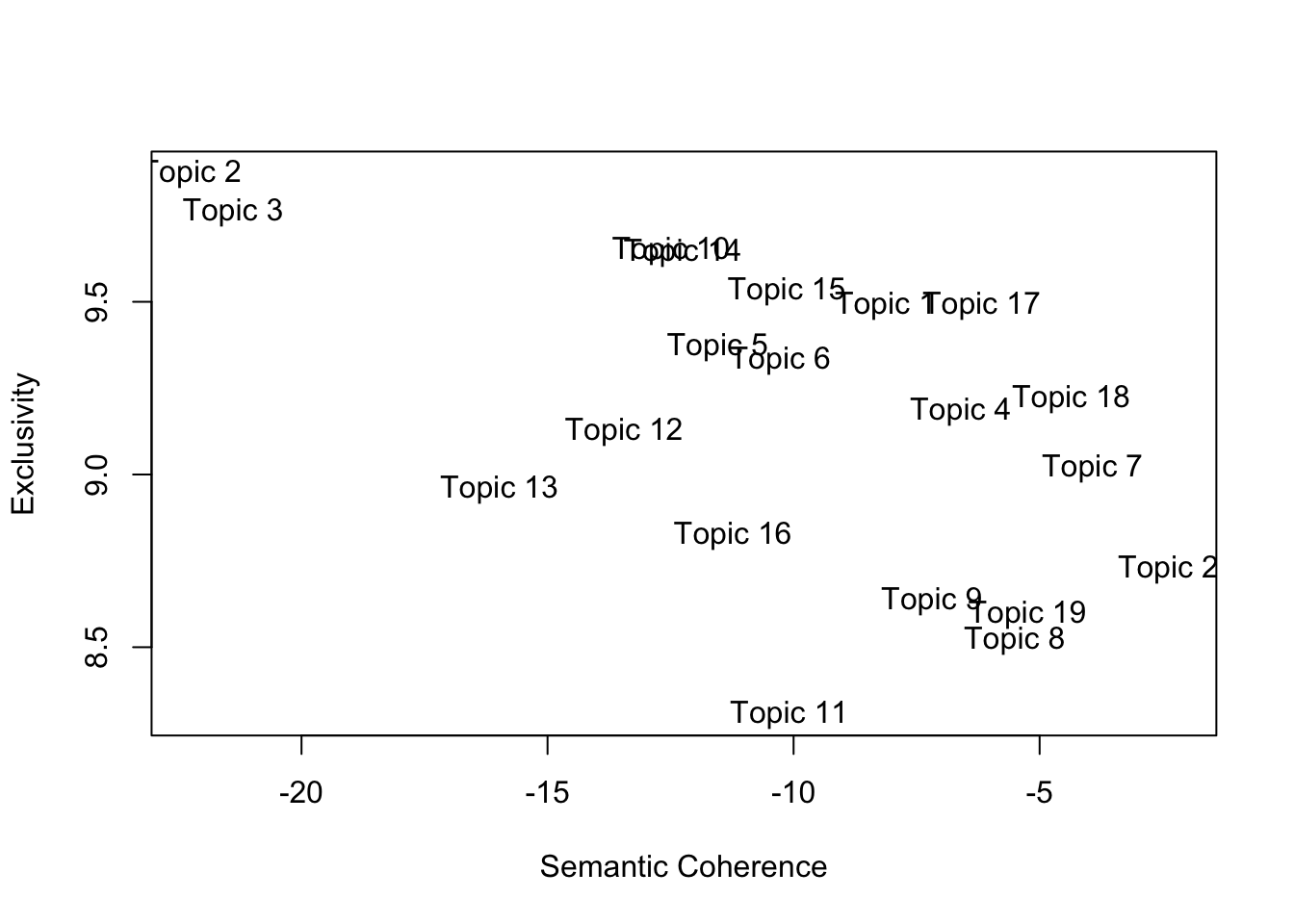

stm_us_pres_10_15_20 <- manyTopics(dfm_us_pres,

prevalence = ~ party,

K = c(10,15,20), runs=2,

# max.em.its = 100,

init.type = "Spectral") # It takes around 250 iterations for the model to converge. Depending on your computer, this can take a while.

We can now compare the performance of each model based on their semantic coherence and exclusivity. We are looking for high exclusivity and high coherence (top-right corner):

k_10 <- stm_us_pres_10_15_20$out[[1]] # k_10 is an stm object which can be explored and used like any other topic model.

k_15 <- stm_us_pres_10_15_20$out[[2]]

k_20 <- stm_us_pres_10_15_20$out[[3]]

# I will just graph the 'quality' of each model:

topicQuality(k_10,dfm_us_pres)

## [1] -5.287875 -9.358241 -12.913601 -2.995819

## [5] -8.562729 -11.770514 -4.095783 -5.495206

## [9] -5.782951 -4.769013

## [1] 8.975480 9.330844 8.792229 8.230546 7.888169

## [6] 9.119047 8.616511 7.905410 8.690023 8.814819

topicQuality(k_15,dfm_us_pres)

## [1] -8.282551 -10.661122 -9.146329 -6.243444

## [5] -10.002100 -11.315179 -3.107797 -4.907182

## [9] -5.059424 -4.905652 -7.864316 -13.149897

## [13] -6.834348 -11.917696 -4.182962

## [1] 9.224913 9.358942 9.252240 9.185552 9.037701

## [6] 9.150431 8.614509 8.496738 8.546778 9.138797

## [11] 8.182268 9.136596 8.467905 9.641939 8.453004

topicQuality(k_20,dfm_us_pres)

## [1] -8.136428 -22.245476 -21.390006 -6.602534

## [5] -11.543624 -10.272049 -3.923380 -5.506620

## [9] -7.188791 -12.486262 -10.086060 -13.443443

## [13] -15.978725 -12.256070 -10.137597 -11.231218

## [17] -6.177453 -4.358259 -5.246579 -2.209688

## [1] 9.488914 9.872405 9.761287 9.184162 9.370479

## [6] 9.330506 9.018598 8.520731 8.634212 9.649024

## [11] 8.306973 9.125545 8.958623 9.644274 9.532977

## [16] 8.823851 9.488908 9.220082 8.595757 8.726901
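One simple way to compare the three models is to average the per-topic values printed above. The sketch below copies those numbers by hand (first vector of each output is semantic coherence, second is exclusivity); summarizing each model by the mean is an assumption made here for illustration, and other summaries (medians, or the full scatterplot) are just as defensible.

```r
# Semantic coherence per topic, copied from the topicQuality() output above.
coh <- list(
  k10 = c(-5.287875, -9.358241, -12.913601, -2.995819, -8.562729,
          -11.770514, -4.095783, -5.495206, -5.782951, -4.769013),
  k15 = c(-8.282551, -10.661122, -9.146329, -6.243444, -10.002100,
          -11.315179, -3.107797, -4.907182, -5.059424, -4.905652,
          -7.864316, -13.149897, -6.834348, -11.917696, -4.182962),
  k20 = c(-8.136428, -22.245476, -21.390006, -6.602534, -11.543624,
          -10.272049, -3.923380, -5.506620, -7.188791, -12.486262,
          -10.086060, -13.443443, -15.978725, -12.256070, -10.137597,
          -11.231218, -6.177453, -4.358259, -5.246579, -2.209688)
)

# Exclusivity per topic, copied from the same output.
excl <- list(
  k10 = c(8.975480, 9.330844, 8.792229, 8.230546, 7.888169,
          9.119047, 8.616511, 7.905410, 8.690023, 8.814819),
  k15 = c(9.224913, 9.358942, 9.252240, 9.185552, 9.037701,
          9.150431, 8.614509, 8.496738, 8.546778, 9.138797,
          8.182268, 9.136596, 8.467905, 9.641939, 8.453004),
  k20 = c(9.488914, 9.872405, 9.761287, 9.184162, 9.370479,
          9.330506, 9.018598, 8.520731, 8.634212, 9.649024,
          8.306973, 9.125545, 8.958623, 9.644274, 9.532977,
          8.823851, 9.488908, 9.220082, 8.595757, 8.726901)
)

# One row per model: average coherence and average exclusivity.
quality <- data.frame(
  K = c(10, 15, 20),
  mean_coherence = sapply(coh, mean),
  mean_exclusivity = sapply(excl, mean)
)
quality
```

With these numbers, average coherence falls and average exclusivity rises as K grows, so there is a trade-off rather than a clear winner; the choice of K still needs substantive validation.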

Maybe we have some theory about the difference in topic prevalence across parties. We can see the topic proportions in our topic model object:

head(stm_us_pres$theta)

## [,1] [,2] [,3]

## [1,] 0.0001979850 9.251664e-05 8.228013e-05

## [2,] 0.0004943565 6.848981e-05 9.820101e-01

## [3,] 0.0002944155 4.882956e-05 9.988036e-01

## [4,] 0.1142592136 9.390427e-04 8.766105e-01

## [5,] 0.0114556926 2.301045e-04 6.716342e-03

## [6,] 0.0253504520 3.633666e-04 4.721880e-03

## [,4] [,5] [,6]

## [1,] 1.374029e-04 0.0003064405 0.0001963620

## [2,] 1.268903e-04 0.0165642826 0.0001660826

## [3,] 1.888195e-05 0.0005382537 0.0000637886

## [4,] 2.738605e-04 0.0030665368 0.0007906834

## [5,] 1.151595e-03 0.9771020843 0.0005254018

## [6,] 1.752615e-03 0.9609463741 0.0007236007

## [,7] [,8] [,9]

## [1,] 1.252948e-04 9.985702e-01 1.878275e-04

## [2,] 1.833598e-04 2.357889e-04 9.220995e-05

## [3,] 5.100825e-05 6.868126e-05 6.709721e-05

## [4,] 8.990925e-04 7.088244e-04 1.080922e-03

## [5,] 4.726644e-04 8.332618e-04 8.167810e-04

## [6,] 6.685461e-04 1.759546e-03 1.313855e-03

## [,10]

## [1,] 1.037197e-04

## [2,] 5.844376e-05

## [3,] 4.546202e-05

## [4,] 1.371326e-03

## [5,] 6.960722e-04

## [6,] 2.399765e-03

Note that the topic proportions \(\theta\) add up to 1 within a document. That is, each row tells us the share of (words associated with) each topic in that document:

sum(stm_us_pres$theta[1,])

## [1] 1

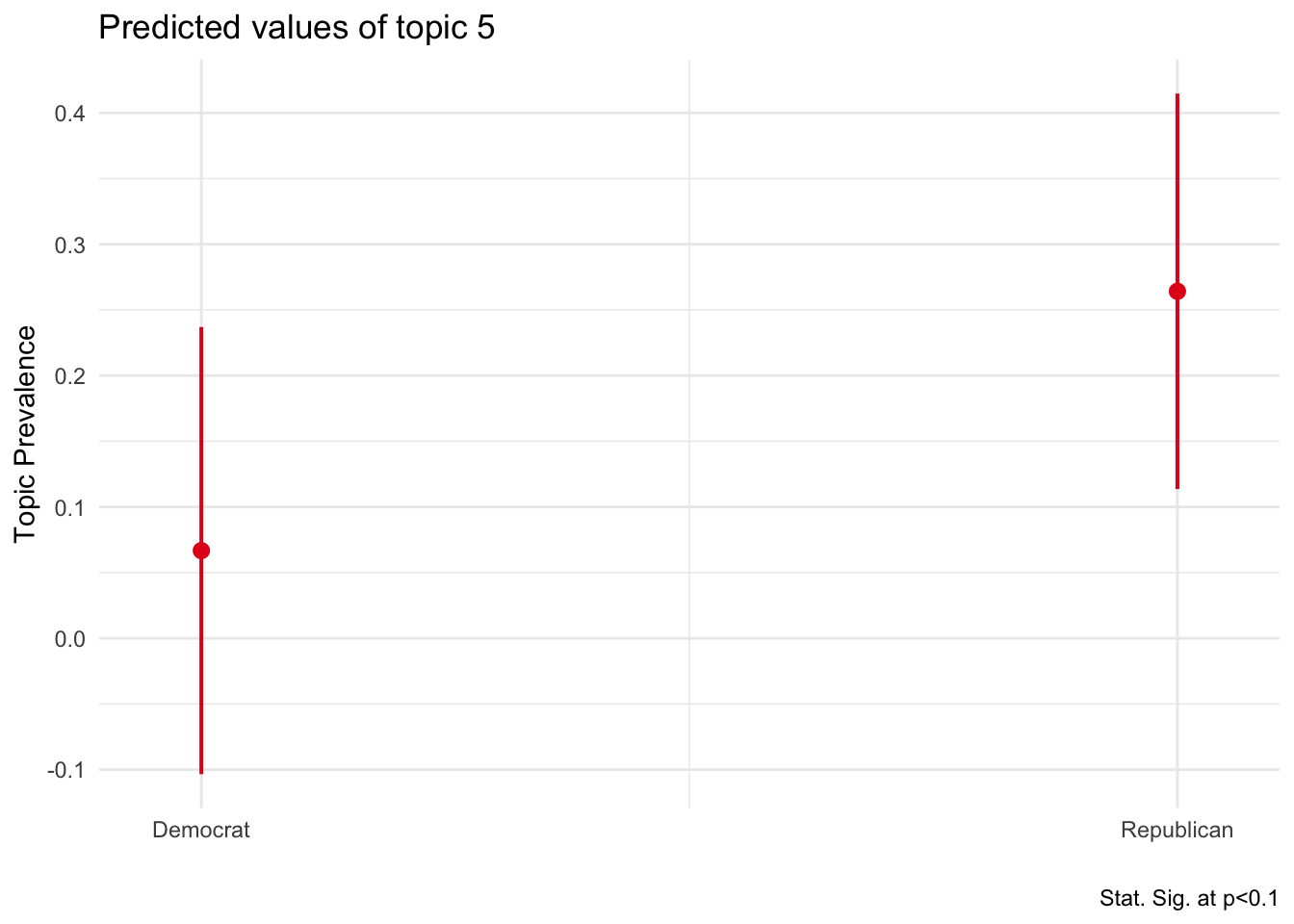

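sum(stm_us_pres$theta[2,])

## [1] 1

What about connecting this information to the document variables in our dfm and checking whether the two parties differ in how much they address topic 5 (economy)? The code below uses feols() from the fixest package and plot_model() from the sjPlot package:

library(fixest) # for feols()

library(sjPlot) # for plot_model()

## #refugeeswelcome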

us_pres_prev <- data.frame(topic5 = stm_us_pres$theta[,5], docvars(dfm_us_pres))

feols_topic5 <- feols(topic5 ~ party , data = us_pres_prev)

plot_model(feols_topic5, type = "pred", term = "party") +

theme_minimal() +

labs(caption = "Stat. Sig. at p<0.1", x="", y="Topic Prevalence")

## Some of the focal terms are of type

## `character`. This may lead to unexpected

## results. It is recommended to convert these

## variables to factors before fitting the

## model.

## The following variables are of type

## character: `party`

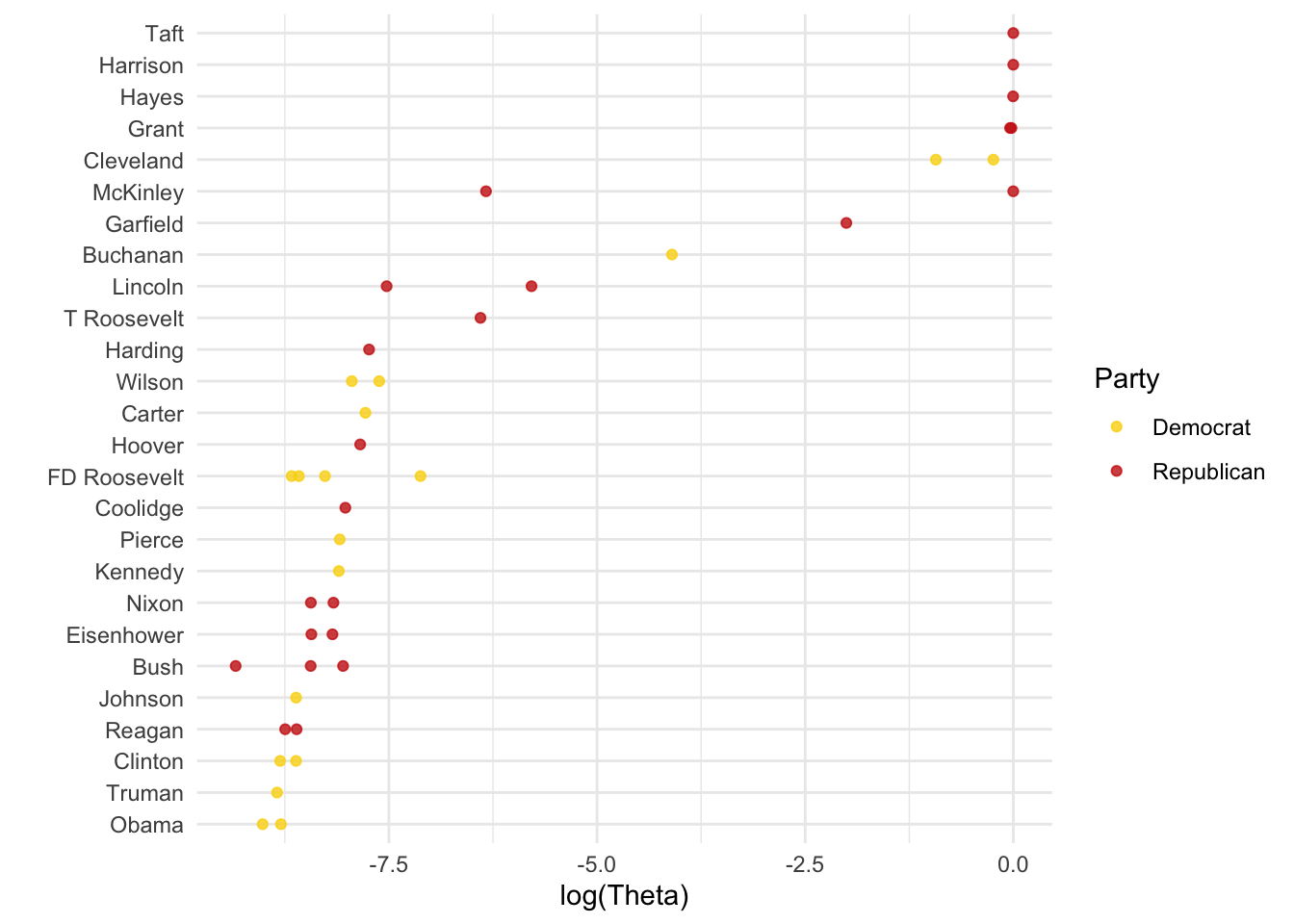

It seems that Republican presidents address the economy more in their speeches. Let’s plot the prevalence of the economy topic in each speech, by president:

us_pres_prev %>%

# We log the prevalence of topic 5 because it is quite skewed, but you should probably leave it as is if you want to explore how topics are addressed.

ggplot(aes(x = log(topic5), y = reorder(President,topic5), color = party)) +

geom_point(alpha = 0.8) +

labs(x = "log(Theta)", y = "", color = "Party") +

scale_color_manual(values = wes_palette("BottleRocket2")) +

theme_minimal()

We can do something similar with the stm package directly, using estimateEffect(). We just need to specify the functional form and add the document variables.

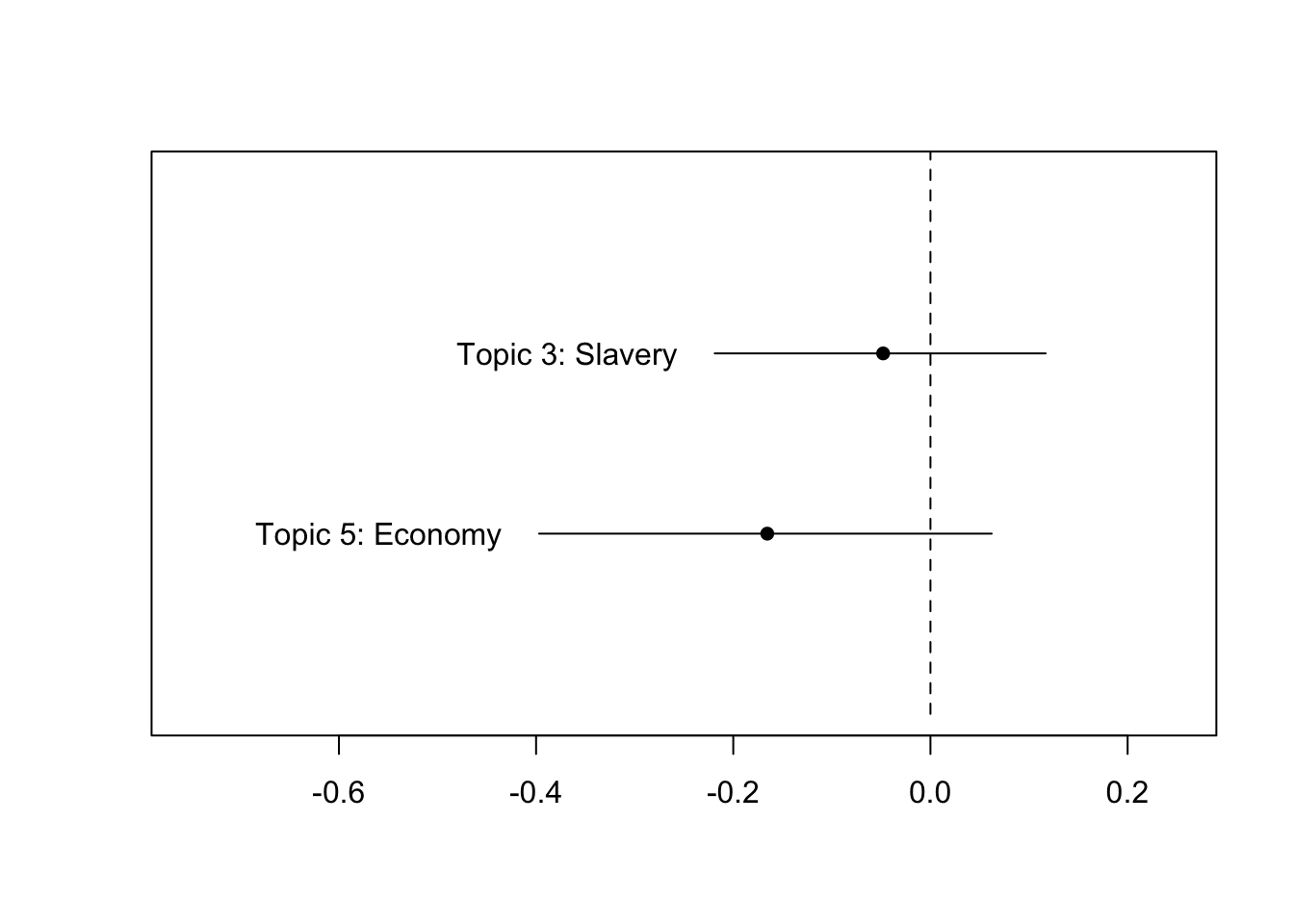

topics_us_pres <- estimateEffect(c(3,5) ~ party, stm_us_pres, docvars(dfm_us_pres)) # You can compare other topics by changing c(3,5) to, e.g., c(6,9).

plot(topics_us_pres, "party", method = "difference",

cov.value1 = "Democrat",

cov.value2 = "Republican",

labeltype = "custom",

xlim = c(-.75,.25),

custom.labels = c('Topic 3: Slavery', 'Topic 5: Economy'),

model = stm_us_pres)

Same result: Republicans devote more of their speeches to Topic 5 (Economy).
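Both approaches boil down to comparing average topic proportions across groups. As a minimal sketch, the block below takes the topic 5 proportions of the first six speeches from the head(stm_us_pres$theta) output above, pairs them with the party of each speaker, and shows that the coefficient from a simple regression on party equals the difference in group means. Using only six documents and plain lm() is an assumption made to keep the example self-contained; it is not a substitute for the full analysis.

```r
# Topic 5 proportions for the first six speeches (copied from head(theta) above),
# with the party of each speaker (Pierce, Buchanan, Lincoln, Lincoln, Grant, Grant).
toy <- data.frame(
  party = factor(c("Democrat", "Democrat", "Republican",
                   "Republican", "Republican", "Republican")),
  topic5 = c(0.0003064405, 0.0165642826, 0.0005382537,
             0.0030665368, 0.9771020843, 0.9609463741)
)

# Average topic 5 proportion by party.
group_means <- tapply(toy$topic5, toy$party, mean)
group_means

# With a single binary predictor, the OLS slope is the difference in group means.
fit <- lm(topic5 ~ party, data = toy)
coef(fit)["partyRepublican"]
group_means["Republican"] - group_means["Democrat"]
```

One difference worth keeping in mind: this sketch treats the topic proportions as if they were observed data, whereas estimateEffect() can also account for the uncertainty in the estimated proportions.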